Bard, Claude, and GPT Kick Off an Overseas Large-Model Melee; Is OpenAI Starting to Opt Out of the Arms Race? Meta Beats Midjourney | [Hard AI] Weekly Report

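Bard, Claude 2, and ChatGPT have all been upgraded, and nobody is sitting idle; every large model is chasing ChatGPT, yet OpenAI is preparing to opt out of the arms race; Meta beats Midjourney; Stability AI teams up with Tencent to launch Stable Doodle.

What were the big stories in AI this week?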

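Outlook

Every large model is chasing ChatGPT, yet OpenAI is preparing to opt out of the arms race.

This week, no sooner had OpenAI rolled out its "Code Interpreter" plugin than its two strongest competitors, Anthropic and Google, announced updates to Claude and Bard in quick succession.

Both rivals are upgrading in the same direction: letting users get the equivalent of GPT-4 with a Plus subscription for free, or even something better.

The pioneer of large AI models, meanwhile, is in no hurry: rather than racing on model scale, it even seems prepared to pause and let other large models catch up.

"According to foreign media reports, OpenAI is preparing to create multiple smaller GPT-4 models that are cheaper to run, with each smaller expert model trained on different tasks and subject areas."

In short, OpenAI intends to take a lightweight, cost-cutting route, and its next goal is likely to be promoting a range of vertical, domain-specific models.

In the view of [Hard AI], OpenAI's mixture-of-experts approach may well sacrifice some answer quality for now, but it may be a more effective path toward industrial applications.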
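To make the mixture-of-experts idea concrete, here is a toy routing sketch. It is not OpenAI's design, which the report does not describe; the expert names, keyword lists, and bias value are all hypothetical stand-ins. A real system would use a learned gating network and actual models rather than keyword counts and string formatters.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical experts: each lambda stands in for a small specialised model.
EXPERTS = {
    "code": lambda q: f"[code expert] {q}",
    "math": lambda q: f"[math expert] {q}",
    "general": lambda q: f"[general expert] {q}",
}

# Hypothetical keyword lists standing in for a learned gating network.
GATE_KEYWORDS = {
    "code": ("python", "bug", "function"),
    "math": ("integral", "prime", "equation"),
    "general": (),
}

def gate(query):
    """Score every expert for this query and return a probability per expert."""
    words = query.lower().split()
    names = list(EXPERTS)
    scores = [float(sum(w in GATE_KEYWORDS[n] for w in words)) for n in names]
    # Small bias so the general expert wins when no keyword matches.
    scores[names.index("general")] += 0.5
    return dict(zip(names, softmax(scores)))

def route(query):
    """Top-1 routing: only the highest-scoring expert is run."""
    probs = gate(query)
    best = max(probs, key=probs.get)
    return best, EXPERTS[best](query)
```

Because only one small expert runs per query, the cost per request is that of a small model, which is the cost-saving trade-off the paragraph above describes.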

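This week's report also covers the following news:

1. Bard, Claude 2, and ChatGPT have all been upgraded; nobody is sitting idle

2. The AI image-generation race heats up again:

Meta beats Midjourney; Stability AI teams up with Tencent to launch Stable Doodle; the video segmentation model SAM-PT arrives;

3. Major events for domestic models:

The Cyberspace Administration "insures" domestic large models; Alibaba open-sources China's first large-model "alignment dataset"; JD.com releases the Yanxi large model; BAAI surpasses DeepMind; Wang Xiaochuan's large model is upgraded again

4. Overseas headlines

Oxford and Cambridge lift their bans on ChatGPT; Meta is set to release a commercial version of its AI model; Musk reverses himself, going from opposing AI to founding "xAI";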

Bard, Claude 2, and ChatGPT: nobody is sitting idle

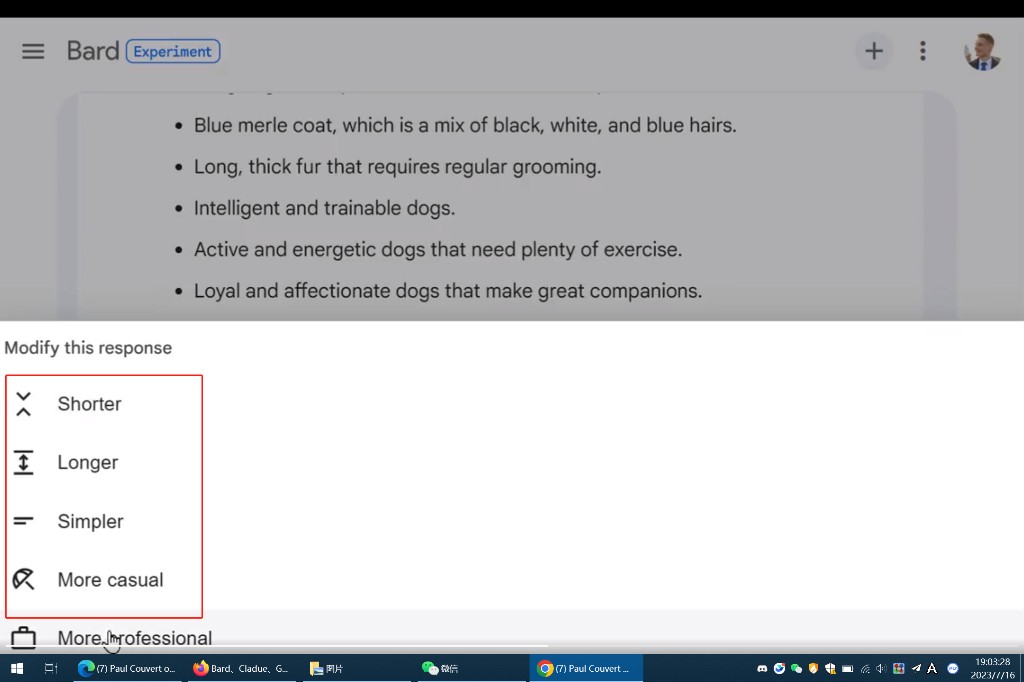

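1. Bard update: Chinese support, image understanding, and voice input

Bard, which previously accepted only English prompts, now supports input in more than 40 languages, including Chinese, and has become available in the EU and Brazil.

Beyond that, Bard has added the following features:

- Uploading and understanding images (note: English version only)

- Asking questions by voice

- Saving conversation history and sharing conversation links (same as ChatGPT)

- Customizing the length and style of responses

- Exporting code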

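2. Claude 2: summarize PDFs in one click

The second-generation Claude offers for free what amounts to ChatGPT Plus membership features: it supports PDF uploads and can find and summarize the relationships between the contents of multiple documents (txt, pdf, and other formats are supported, up to a maximum of 10 MB).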

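3. ChatGPT launches its most powerful plugin: Code Interpreter

Code Interpreter is the latest plugin for GPT-4. It was initially billed as the plugin that "turns everyone into a data analyst" (it is especially strong at data processing and plotting).

Recently, though, users testing it have unlocked some new uses, such as making short videos, simple mini-games, and memes.

It seems the plugin's capabilities still await further exploration by users.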

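What else happened in AI image generation

1. Meta breaks through the multimodal ceiling, beating Stable Diffusion and Midjourney

Meta has launched a single multimodal large model, CM3leon. Is it at the top from day one?

CM3leon is now said to be better than Stable Diffusion, Midjourney, and DALL-E 2. Why?

[How hard-core is it]

CM3leon stands out by using an autoregressive model, reportedly needing five times less compute than the diffusion models used by earlier front-runners such as Stable Diffusion;

It can handle more complex prompts and still complete the image-generation task;

It can edit existing images according to text instructions in any format, such as changing the color of the sky or adding an object at a specific position.

Objectively speaking, CM3leon's capabilities really could put it at the top of the multimodal market: not only is the image clarity higher, it also breaks through earlier bottlenecks in multimodal image generation, such as rendering hand details and laying out objects and spatial details from language prompts.

This may all be thanks to CM3leon's multi-purpose architecture, which means multimodal models will be able to switch freely among text, image, video, and other tasks, something earlier multimodal models could not do.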

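2. Stability AI launches Stable Doodle, a model for controlling image generation

Put simply, Stable Doodle takes a sketch as input and uses it to control the generated image, similar in effect to ControlNet.

[How hard-core is it]

Stable Doodle combines the Stable Diffusion XL model with T2I-Adapter.

T2I-Adapter is an image-text controller from Tencent ARC Lab. With only 70M parameters and 300 MB of storage, it is very compact, yet it understands the outlines of a sketch well and gives SDXL finer control over image generation.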

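3. The video segmentation model SAM-PT arrives

A while ago, Meta AI open-sourced a very powerful foundation model for image segmentation, the Segment Anything Model (SAM), which instantly set the AI community abuzz.

Now researchers from ETH Zurich, the Hong Kong University of Science and Technology, and EPFL have released SAM-PT, which extends SAM's zero-shot capability to tracking and segmentation in dynamic video.

In other words, fine-grained segmentation now works on video as well.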

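Domestic large-model events

1. The Cyberspace Administration steps in, and domestic large models get "insurance"

Seven departments, led by the Cyberspace Administration of China, jointly issued the Interim Measures for the Management of Generative Artificial Intelligence Services (hereafter "the Measures"), which take effect on August 15, 2023.

The main points include:

1. Requiring categorized and tiered supervision;

2. Setting explicit requirements for training data processing and labeling;

3. Specifying the requirements for providing and using generative AI services;

The release of the Measures effectively gives companies that use or provide generative AI services in China a form of insurance: from now on, even if problems arise, people will know where to file a complaint.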

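2. Alibaba open-sources China's first large-model "alignment dataset"

Last month, a joint team from Tmall Genie and the Tongyi large-model group released 100PoisonMpts, an open-source dataset for large-model governance, also known as "100 bottles of poison for AI." Its purpose is to try to lure AI into the traps of discrimination and bias that even ordinary people find hard to avoid.

Here are the evaluation results after "poisoning" several large models: on questions about depression, GPT-4, GPT-3.5, and Claude still have the higher overall scores.

Alibaba has also open-sourced CValue, an alignment evaluation dataset of 150,000 entries, intended mainly for research on "large-model alignment."

What is alignment for?

Put simply, alignment research aims to make AI give answers that better match human intent: answers that are more emotionally aware, show empathy, and conform to human values, in the hope that AI will eventually learn humanistic care.

On the right is the result after alignment: the answers from ChatPLUG-100Poison after alignment training really do have a human touch.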

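3. JD.com releases the Yanxi large model

JD.com has officially released the Yanxi (言犀) large model and the Yanxi AI development and computing platform, aiming to build the service tool that best understands industry.

Yanxi is now open for registration by reservation and is expected to launch officially in August.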

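4. BAAI surpasses DeepMind

The "WuDao Vision" research team at the Beijing Academy of Artificial Intelligence (BAAI) has open-sourced Emu, a new unified multimodal pretrained model. It not only performs strongly on 8 benchmarks but also surpasses a number of previous SOTA results.

The model's defining feature is that it connects multimodal input to multimodal output.

It can complete content for arbitrary multimodal image-text tasks and autoregressively predict the next step of the task.

What can this pretrained model be used for?

It can be used to train a model comparable to Meta's freshly released CM3leon. (The method has been handed over; the rest is up to your own effort.)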

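5. Wang Xiaochuan's large model is upgraded again

Baichuan Intelligence has released another upgraded large model, Baichuan-13B, with the parameter count jumping from 7 billion to 13 billion.

Debuting alongside it are a chat model, Baichuan-13B-Chat, and its two quantized versions, INT4 and INT8.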
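To show why quantized versions matter, the sketch below estimates weight memory at different precisions. It assumes exactly 13 billion parameters (the real count may differ slightly) and counts weights only, ignoring activations, caches, and runtime overhead; the function name and the bytes-per-parameter table are illustrative and do not come from the Baichuan release.

```python
# Bytes needed to store one weight at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(num_params, precision):
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

if __name__ == "__main__":
    for precision in ("fp16", "int8", "int4"):
        print(precision, weight_memory_gb(13e9, precision), "GB")
```

Under these assumptions, the weights take about 26 GB at fp16, 13 GB at INT8, and 6.5 GB at INT4, which is roughly why the quantized versions fit on smaller GPUs.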

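Baichuan-13B raises the ceiling for open-source training data:

Baichuan-13B was trained on 1.4 trillion tokens, 140% of the amount used for LLaMA-13B (Meta's well-known large model). In Chinese-language evaluations, especially in natural science, medicine, art, and mathematics, it outperforms GPT outright.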

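Other overseas AI news

- Oxford and Cambridge lift their bans on ChatGPT;

- Meta is set to release a commercial version of its AI model;

- Musk reverses himself: after loudly opposing generative AI, he has now announced the founding of "xAI";

Author: Han Feng. Source: Hard AI. Original title: "Bard, Claude, and GPT Kick Off an Overseas Large-Model Melee; Is OpenAI Starting to Opt Out of the Arms Race? Meta Beats Midjourney | [Hard AI] Weekly Report"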