Full text of Lisa Su's CES keynote: the yotta-scale era has arrived and Helios takes the stage. "In the next four years, we aim to achieve a 1000-fold increase in AI performance"

Dr. Lisa Su brought AMD's full AI stack to CES and declared the start of the "yotta-scale computing era." To serve a projected 5 billion AI users, AMD unveiled the Helios platform, a "computing monster," and pledged a 1000-fold increase in AI chip performance within four years. With new consumer chips that AMD says outperform Intel's in computing power and a rack-scale platform for the cloud, the company is pushing on both the device and cloud fronts and igniting a new round of the global computing arms race.

At 10:30 AM Beijing time on the 6th, in Las Vegas, USA, under the spotlight of the global "Tech Spring Festival" - the International Consumer Electronics Show (CES), following NVIDIA CEO Jensen Huang, AMD Chairwoman and CEO Lisa Su took the stage with AMD's AI full suite.

"Since the release of ChatGPT, the number of active users using AI has increased from 1 million to 1 billion, and by 2030, the number of active users using AI will reach 5 billion....." Lisa Su opened by discussing the explosive demand for AI. In response to this trend, AMD's solution is the latest MI455X GPU, and based on 72 MI455X GPUs and 18 Venice CPUs, they have built an open 72-card server called "Helios."

Lisa Su emphasized that the MI455X series has a 10-fold performance improvement compared to the MI355X.

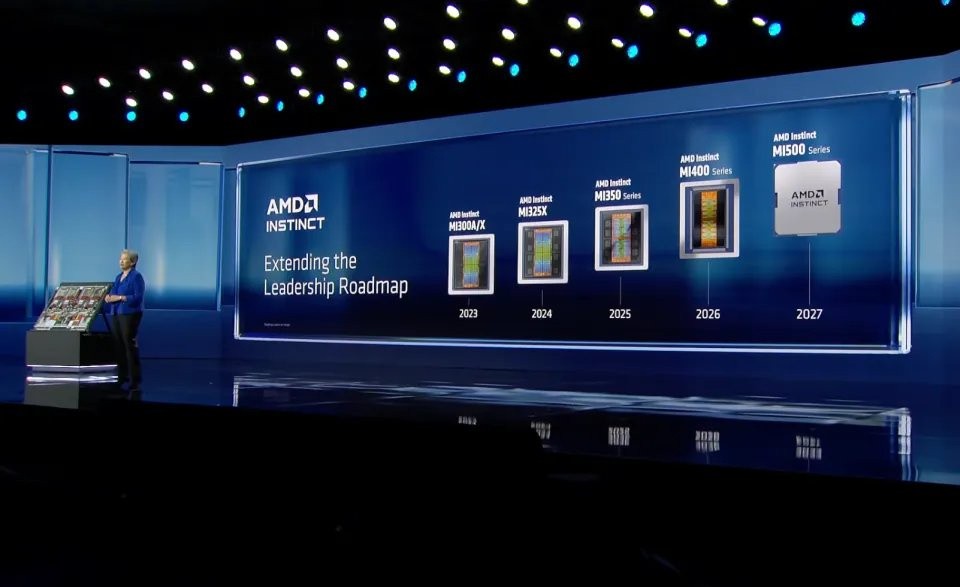

AMD also provided a roadmap for the Instinct series GPUs. According to official statements, the MI500, which is set to launch in 2027, will adopt a 2nm process and use HBM4e memory, with potential improvements of up to 30 times compared to the MI455X. "In the next four years, we aim to achieve a 1000-fold increase in AI performance."

Key points of the speech:

Computing Power Goals:

AMD declared the start of the "yotta-scale" computing era, with global AI compute expected to grow from about 1 zettaflops in 2022 to 10 yottaflops within the next five years (1 yottaflops = 10²⁴ floating-point operations per second), a 10,000-fold increase over 2022. The goal is to support growth in AI users from 1 billion today to 5 billion in the future (more than half of the global population).
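The scale figures above can be checked with a few lines of arithmetic. This is only an illustrative sketch: it uses the standard SI prefixes (zetta = 10²¹, yotta = 10²⁴) and the numbers quoted in the article, and the variable names are made up for the example.

```python
# SI prefixes as powers of ten (standard definitions).
ZETTA = 10**21
YOTTA = 10**24

# Figures quoted in the article, in floating-point operations per second.
compute_2022 = 1 * ZETTA       # about 1 zettaflops in 2022
compute_target = 10 * YOTTA    # 10 yottaflops target

growth = compute_target // compute_2022
print(f"Target vs. 2022: {growth:,}x")

# User growth cited alongside it: 1 billion to 5 billion active users.
users_now, users_future = 1_000_000_000, 5_000_000_000
user_growth = users_future // users_now
print(f"User growth: {user_growth}x")
```

By these figures, compute is projected to grow far faster than the user base, which matches the keynote's point that per-user demand is rising as well.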

Technical Path:

Through a "full-stack open" strategy, integrating CPU, GPU, NPU, and custom accelerators, covering all scenarios from cloud to edge to personal devices, emphasizing "matching optimal computing power for different workloads," and relying on an open ecosystem (such as industry standards and open-source software) to accelerate innovation.

Helios Rack Platform Leading Large-Scale AI Computing:

Launching the Helios rack-level AI platform, providing the hardware foundation for yotta-scale computing, using "liquid cooling + modular design" (dual-width OCP standard), weighing nearly 7,000 pounds (about 3.2 tons), equivalent to two compact cars.

Equipped with Instinct MI455X GPU (2/3nm process, 320 billion transistors, 432GB HBM4 memory, 10-fold performance improvement over MI355), EPYC "Venice" CPU (2nm process, 256-core Zen 6, memory/GPU bandwidth doubled, optimized for AI), and Pensando 800G Ethernet chip;

A single rack delivers 2.9 exaflops of AI compute, carries 31TB of HBM4 memory and 260TB/s of scale-up bandwidth, lets 72 GPUs work as one unified system, and can be linked with thousands of other racks to build ultra-large-scale clusters.

Future plans:

Launch of the Instinct MI500 series in 2027 (CDNA 6 architecture, 2nm process, faster HBM memory), aiming for a 1000-fold increase in AI chip performance within 4 years.
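To put the 1000-fold target in perspective, the sketch below converts it into an implied yearly gain. It assumes the improvement compounds evenly over the four years, which AMD did not state, so treat the result as a rough reading of the claim rather than a roadmap figure.

```python
import math

target_gain = 1000   # overall improvement quoted in the article
years = 4

# Assumption: the gain compounds evenly, so each year multiplies
# performance by the same factor.
annual_factor = target_gain ** (1 / years)
print(f"Implied annual gain: {annual_factor:.2f}x")

# Equivalent doubling time under the same assumption.
doubling_months = 12 * math.log(2) / math.log(annual_factor)
print(f"Implied doubling time: {doubling_months:.1f} months")
```

Under that assumption the target works out to roughly a 5.6-fold gain per year, or a doubling about every five months.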

Ryzen AI 400 series as the core of "Universal AI Computing Power":

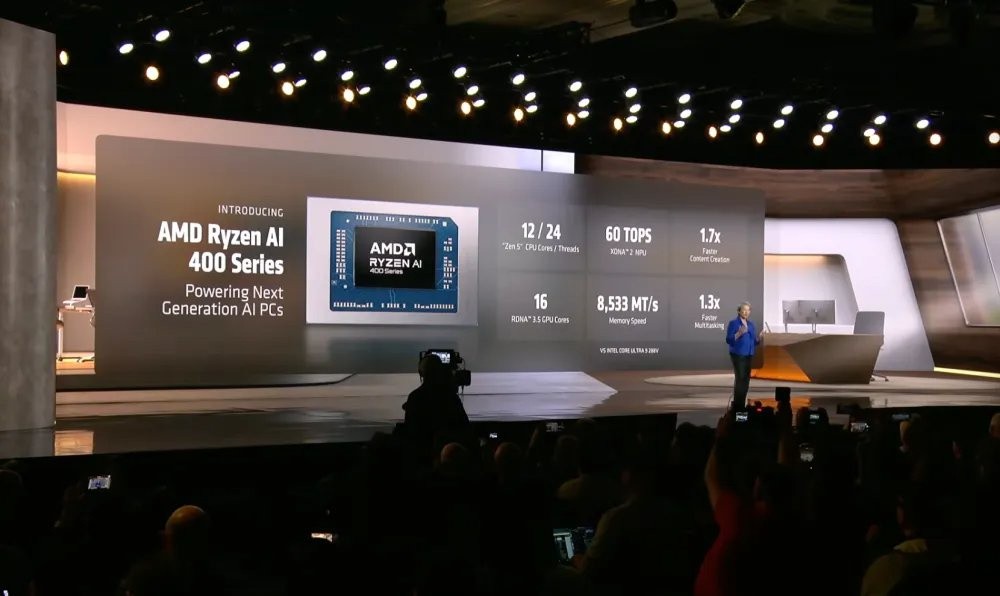

7 consumer-grade models + corresponding Ryzen AI PRO 400 commercial series (launching in Q1), covering 4 cores 8 threads to 12 cores 24 threads, suitable for ultra-thin laptops, performance laptops, and commercial laptops.

The NPU adopts the XDNA 2 architecture, with a computing power of 60 TOPS, a 20% improvement over the previous generation, 10 TOPS higher than Intel's Panther Lake processors (50 TOPS), and a UL Procyon score of 1938 points;

CPU boost clock of 5.2GHz (+100MHz), GPU clock of 3.1GHz (RDNA 3.5), and support for LPDDR5X-8533 memory. According to AMD, multitasking performance leads the Intel Core Ultra 9 288V by 29%, content creation (Blender/7-Zip) leads by 71%, 1080p gaming frame rates lead by 12%, and video playback battery life reaches up to 24 hours.

“Computing Power Nuclear Bomb”

In response to the prediction that "by 2030, the number of active AI users will reach 5 billion," AMD's solution is the latest MI455X GPU, and based on 72 MI455X GPUs and 18 Venice CPUs, has built an open 72-card server called "Helios."

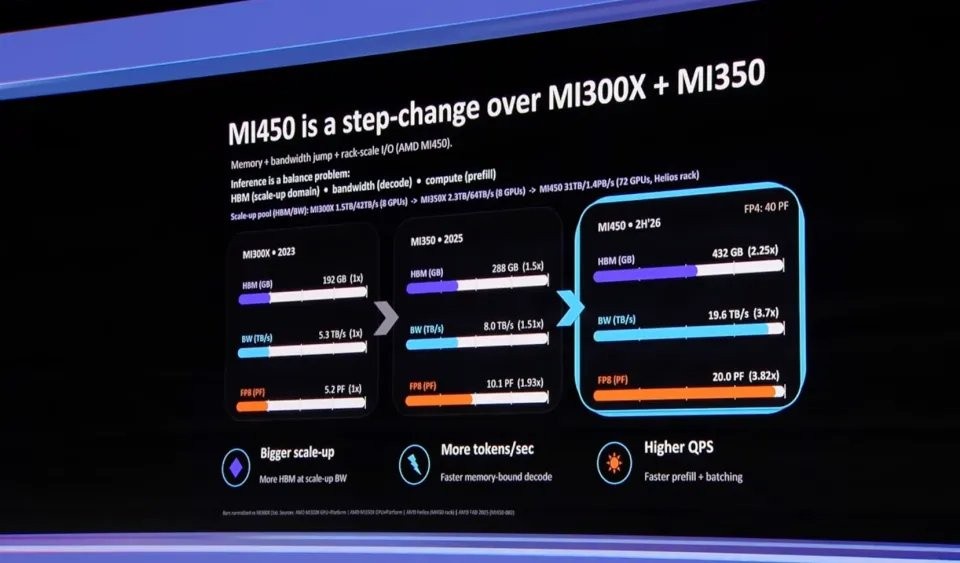

According to AMD, the MI450, which will be launched in 2026, is equivalent to MI300X + MI350, representing a stepwise innovation and performance leap. With the help of HBM memory, it scales along three dimensions (memory capacity, bandwidth, and computing power), breaking the "memory wall" that limits AI inference.

The MI450 provides 20PF of computing power at mainstream FP8 precision, with performance close to four times that of the first generation. Moreover, it can achieve extremely high performance of 40PF at FP4 precision.

AMD also provided the roadmap for the Instinct series GPUs. According to official statements, the MI500, which is set to launch in 2027, will adopt a 2nm process and use HBM4e memory, with potential improvements of up to 30 times over the MI455X.

In terms of the Helios rack, according to official information, Helios has a total of 18 computing trays, with each computing tray using 1 Venice CPU and 4 MI455X GPUs.

Across the rack, the Venice CPUs (2nm process) total about 4,600 cores, while the MI455X GPUs (2nm and 3nm processes) total about 18,000 compute units. They are paired with a total of 31TB of HBM4 memory and 43TB/s of aggregate scale-out bandwidth, providing 2.9 exaflops of FP4 computing power.
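The rack-level totals follow from the per-tray layout. The sketch below multiplies them out using figures from the article (18 trays, 4 GPUs and 1 CPU per tray, 432GB of HBM4 per GPU, up to 256 cores per Venice CPU). It assumes every CPU ships with the maximum core count and uses decimal units (1TB = 1,000GB); the constant names are illustrative.

```python
# Per-tray and per-chip figures quoted in the article.
TRAYS = 18
GPUS_PER_TRAY = 4
CPUS_PER_TRAY = 1
CORES_PER_CPU = 256     # assumption: every Venice CPU has the maximum 256 cores
HBM_PER_GPU_GB = 432

gpus = TRAYS * GPUS_PER_TRAY
cpus = TRAYS * CPUS_PER_TRAY
cpu_cores = cpus * CORES_PER_CPU
hbm_tb = gpus * HBM_PER_GPU_GB / 1000   # decimal terabytes

print(f"GPUs per rack:  {gpus}")
print(f"CPUs per rack:  {cpus}")
print(f"CPU cores:      {cpu_cores:,}")
print(f"HBM4 capacity:  {hbm_tb:.1f} TB")
```

The results (72 GPUs, 18 CPUs, 4,608 cores, about 31TB of HBM4) line up with the rounded totals AMD quoted.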

AMD also emphasized that Helios is an open rack platform leading to Yotta-level computing expansion.

Edge AI is Essential

Lisa Su made a clear judgment on AI PCs at the launch event: AI PCs are not a replacement for cloud AI, but rather the infrastructure for the next generation of personal computing.

AMD officially launched the Ryzen AI 400 series processors at this event. This series uses the Zen 5 CPU architecture and RDNA 3.5 GPU, integrates up to 60 TOPS of NPU computing power, and fully supports the Windows Copilot+ ecosystem.

AMD also officially released the Ryzen AI Max platform aimed at high-performance developers and creators. According to official introductions, Ryzen AI Max is not a routine mobile upgrade, but rather AMD's redefinition of the "local AI computing unit" form.

The CPU is equipped with up to 16 cores/32 threads of Zen 5 architecture, the GPU integrates 40 RDNA 3.5 computing units, NPU computing power reaches 50 TOPS, and it is equipped with 128GB of unified memory. This configuration not only supports multimodal AI inference and generation but can also handle high-load tasks such as compilation, rendering, and data preprocessing.

The World's Smallest AI Development System

AMD also showcased the Ryzen AI Halo. In form, it resembles a mini host, significantly smaller than traditional workstations, and AMD refers to it as "the world's smallest AI development system."

This device is built on the flagship Ryzen AI Max processor, features a unified memory design, and can be configured with up to 128GB of memory to meet the dual demands of capacity and bandwidth when running large models locally. AMD emphasized on-site that this is not a showpiece, but a ready-to-use local AI platform. The platform comes pre-installed with multiple mainstream open-source models, including GPT-OSS, FLUX.2, and Stable Diffusion XL (SDXL). Developers can complete model inference, debugging, and application validation locally without complex configuration. Its target users are not ordinary consumers, but developers, researchers, and small creative teams.

The following is the full text of the speech, translated with AI assistance.

Opening Remarks

At the 2026 International Consumer Electronics Show (CES), innovators from around the world gather to attend this most influential technology event globally. Together, we will envision the future and turn it into reality—tackling the most pressing challenges of our time and proving to the world that technology not only propels us forward but also creates new possibilities.

Here, artificial intelligence is not just a concept but a truly actionable intelligent force. Innovators, storytellers, and changemakers come together to reshape the landscape of content, creativity, and culture.

Pioneers will elevate the healthcare sector to unprecedented heights: from smart interconnected roads to autonomous flying vehicles, from next-generation ocean technologies to innovative solutions that nourish the globe—this is the driving force that propels us forward at CES.

Technology is not just about computation; it is about collaboration. Thinkers in the quantum realm, cybersecurity leaders, fintech visionaries, and robotic engineers are rewriting the rules of business operations. Because when the world's most resolute minds come together, we are not just predicting the future; we are actively creating it. This is a place where bold ideas intersect with global power, a core stage for industry integration, partnership, and breakthroughs.

Innovation has never been a solitary endeavor; it is a collective pursuit. At CES 2026, innovators gather for a shared vision, injecting momentum into technological transformation.

Gary Shapiro, President of the Consumer Technology Association (CTA)

Thank you very much! Good evening, everyone, and welcome to Las Vegas for the first keynote address of the 2026 International Consumer Electronics Show (CES).

Every year, CES allows us to witness up close the ideas and breakthroughs that will shape the next decade, but what truly defines these moments are the people behind the breakthroughs—those leaders who dare to challenge the limits.

Tonight, we are kicking off the exciting chapter of CES 2026 in advance, inviting such a leading figure. As we all know, CES has always been a platform for bold visions and ambitions; it is the cradle where ideas grow into industries and the starting point of a new era of human progress.

Today, artificial intelligence is accelerating across various industries, and we need to listen more than ever to the voices of those who are building the future systems and breakthroughs that will define our future. Therefore, I am incredibly honored to introduce a leader whose vision and influence span the entire technology field.

Dr. Lisa Su is no stranger to this stage; she has delivered keynote speeches at CES multiple times, each time setting the tone for the industry and the year ahead.

Since becoming CEO in 2014, Lisa has led AMD through one of the most extraordinary transformations in modern technology history. Under her leadership, AMD has reinvented itself through relentless innovation in high-performance computing and AI computing, with its products now powering AI training and inference, scientific research, enterprise workloads, cloud infrastructure, and the devices and experiences relied upon by millions daily.

Today, AMD has become a core force in the global AI transformation, with its CPU, GPU, and adaptive computing solutions unlocking new capabilities across cloud, enterprise, edge, and personal computing. While the industry has been discussing the potential of AI for years, Lisa has remained focused on building the computing foundation that makes AI scalable and accessible.

What truly sets Lisa apart is her leadership: a blend of analytical thinking and deep technical expertise, while always staying true to her original intentions. She uniquely combines scientific talent, strategic vision, and a human-centered approach, firmly believing in creating truly valuable solutions through deep collaboration—those that can drive societal progress, strengthen industry capabilities, and expand development opportunities. This is the kind of leadership that CES strives to promote and is essential for moving forward in an accelerating world of innovation.

Tonight, Lisa will share AMD's vision: how high-performance computing and advanced AI architectures will transform every aspect of our digital and physical worlds, from research and healthcare to space exploration, education, and productivity. Breakthroughs in AI are happening at an unprecedented pace, bringing both opportunities and responsibilities.

Transition Introduction (Exhibition Host)

Welcome to this unique moment in human history—a moment that allows the possible to transcend the impossible.

Here, every game you play can be played by your rules; AI is not only dedicated to shaping the future of cities but also helping our children remember what cities once looked like; in times of despair, AI-generated genomic medical solutions bring hope; no driver can have a more acute reaction than AI, and no signal can hinder you from sharing your thoughts.

AI is helping design renewable energy that rivals peak power, making transatlantic travel as easy as a short flight. But even as everything changes in an instant, one thing remains constant: we are tirelessly working to ensure that the most advanced AI capabilities ultimately fall into the right hands—yours.

Ladies and gentlemen, it is my honor to invite to the stage this globally respected technology expert, the defining CEO of the industry, and a leader who continues to shape the trajectory of modern computing development. Let us welcome AMD Chair and CEO—Dr. Lisa Su! (Audience cheers)

AMD Chair and CEO Dr. Lisa Su

Wow, the atmosphere here is amazing! How is everyone doing tonight? (Cheers from the audience) Sounds great.

First, I want to thank Gary Shapiro for the introduction and welcome everyone who is here in Las Vegas and joining us online. It is an incredible honor to kick off CES 2026 with all of you.

Every year, I look forward to coming to CES to see all the latest and greatest technology products and to gather with many friends and partners. But this year, I am especially honored to open CES with all of you. We have a packed agenda for you tonight, and as you might guess, the core theme is AI.

The pace of AI innovation over the past few years has been incredible, but my theme tonight is — you haven't seen the real power yet! (Cheers from the audience) We are just beginning to unlock the potential of AI.

Tonight, I will share many examples and invite top experts from industry giants and innovative startups to join us on stage. We are working together to bring AI to every corner and benefit everyone. So, let's start with AMD.

AMD's mission is to push the boundaries of high-performance computing and AI computing to help solve the world's most critical challenges.

Today, I am incredibly proud to say that AMD technology impacts the lives of billions of people every day — from the largest cloud data centers to the fastest supercomputers in the world, from 5G networks, transportation, to gaming and entertainment, AI is fundamentally changing every corner of these fields.

AI is the most important technology of the past 50 years and is AMD's absolute top priority. It has touched every major industry, whether in healthcare, scientific research, manufacturing, or business, and we have only just scratched the surface. In the coming years, AI will be everywhere, and most importantly, AI belongs to everyone.

It makes us smarter and more capable, allowing everyone to become a more efficient version of themselves. And AMD is building the computing foundation to make this future a reality for every business and every person.

Since the launch of ChatGPT a few years ago, I believe everyone remembers their first experience using it. We have witnessed the number of AI users grow from 1 million to over 1 billion active users today, an incredible growth rate — it took the internet decades to reach this milestone.

And our predictions are even more astonishing: as AI truly becomes an indispensable part of our lives (just like today's smartphones and the internet), the number of AI users will grow to over 5 billion.

The foundation of AI is computing power. With the explosive growth in the number of users, the demand for global computing infrastructure has also surged dramatically: from about 1 zettaflops in 2022 to over 100 zettaflops today, a 100-fold increase in just a few years. But tonight everyone will hear a consensus: even so, our computing power is still far from meeting all potential demands. (On-site response)

Innovation has never stopped: AI models are becoming increasingly powerful, capable of thinking, reasoning, and making better decisions. When expanded to the level of intelligent agents, this capability will be further enhanced.

Therefore, to achieve "AI everywhere," we need to increase global computing power by another 100 times in the next few years, reaching 10 Yottaflops per second within the next five years.

Let me do a quick survey: how many people know what Yottaflops per second is?

Please raise your hand. (Pause) One yottaflops is a 1 followed by 24 zeros floating-point operations per second, and 10 yottaflops is 10,000 times the computing power of 2022. This is unprecedented in the history of computing, as no technology before AI has ever generated such enormous demand.

To achieve this goal, AI needs to be integrated into every computing platform. Tonight, we will cover all scenarios: continuously running, delivering intelligence globally through the cloud; personal computers that help us work more efficiently and achieve personalized experiences; and edge devices that empower machines to make real-time decisions in the real world.

AMD is the only company with a full range of computing engines. To realize this vision, we need to match the most suitable computing power for different workloads—whether it's GPU, CPU, NPU, or custom accelerators, we have it all, and each can be optimized for application scenarios, providing the best performance while achieving the highest cost-effectiveness.

Tonight, we will embark on a journey to explore the latest AI innovations across various fields, including cloud, personal computers, and healthcare. First, let's start with the cloud.

The cloud is the core battleground for training large AI models and a hub for delivering intelligence to billions of users in real-time.

For developers, the cloud provides instant access to vast computing resources and the latest tools, enabling rapid deployment and scaling as application scenarios explode. Most of the AI services we experience today run in the cloud—whether using ChatGPT, Gemini, or programming with CodeLlama, these powerful models rely on cloud support.

Today, AMD is powering AI at every level of the cloud: all major cloud service providers are using AMD EPYC CPUs; eight of the top ten AI companies globally use AMD Instinct accelerators to support their cutting-edge models. And the demand for computing power continues to soar; let me show you some data:

In the past decade, the computing power required to train top AI models has grown more than fourfold each year, and this trend continues; this is also why today's models are becoming smarter and more practical. Meanwhile, with the increase in AI users, inference demand has exploded in the past two years, with token processing volume increasing by 100 times, truly marking a turning point.

To keep up with this computational demand, the entire ecosystem needs to work together. We often say that the real challenge is how to build Yotta-scale AI infrastructure — this requires not only raw performance but also:

Deep integration of leading computing cores (CPU, GPU) and networking technologies;

An open modular rack design that can continuously evolve with product iterations;

High-speed networks that connect thousands of accelerators into a unified system;

An extremely simple deployment experience, which means a turnkey solution.

This is precisely why we are building Helios — the next-generation rack platform designed for the Yotta-scale AI era. Helios achieves innovation at every level of hardware, software, and systems:

First, our engineering team has designed the next-generation Instinct MI455 accelerator, achieving the largest generational performance improvement in our history. The MI455 GPU uses leading 2nm and 3nm process technology, combined with advanced 3D chiplet packaging and ultra-fast HBM4 memory, and is integrated with AMD EPYC CPUs and Pensando networking chips in a single compute tray, forming a tightly integrated unit.

Each tray is connected via a high-speed Ethernet-based Ultra Accelerator Link protocol, allowing the 72 GPUs in the rack to operate as a unified computing unit; subsequently, thousands of Helios racks are connected through industry-standard Ultra Ethernet and Pensando programmable networking technology, forming a powerful AI cluster that further enhances AI performance by offloading some tasks from the GPUs.

Now, since we are at CES, there has to be a "seeing is believing" demonstration. I am proud to present Helios — the world's most powerful AI rack, right here in Las Vegas! (Cheers from the audience) Isn't it impressive?

For those who have never seen such a rack, I must say, Helios is definitely the "behemoth of racks." It is not an ordinary rack but a double-wide rack designed based on the OCP Open Rack Wide standard developed in collaboration with Meta, weighing nearly 7,000 pounds (about 3,175 kilograms) — Gary, you know it took us quite a bit of effort to get it on stage. (Laughter) Its weight is equivalent to two compact cars, and this is the core power supporting all AI applications.

Helios was designed in close collaboration with core customers, optimizing serviceability, manufacturability, and reliability to fit the next-generation AI data center. The core of Helios is the compute tray, so let's take a closer look at what it looks like (live demonstration). I must say, I can't lift this compute tray, so I'll have to ask the staff to help demonstrate it first.

Each Helios compute tray contains 4 MI455 GPUs, paired with the next-generation EPYC "Venice" CPU and Pensando networking chips, all liquid-cooled to maximize performance.

At the core of Helios is our next-generation Instinct GPU, and now I am very excited to showcase the MI455X for the first time! (Cheers from the audience)

The MI455 is our most advanced chip ever, and it is a big one: it contains 320 billion transistors, 70% more than the MI355; it integrates 12 compute and I/O chiplets built on 2nm and 3nm processes, along with 432GB of ultra-fast HBM4 memory, all connected through next-generation 3D chip stacking technology.

Driving these GPUs is our next-generation EPYC CPU, codenamed "Venice"—also making its debut now! (Cheers from the audience)

This is yet another stunning chip, and I truly love our products. (Laughs) It uses a 2nm process technology and can support up to 256 of the latest high-performance Zen 6 cores.

The key is that we designed Venice specifically for AI scenarios, doubling the memory and GPU bandwidth compared to the previous generation, ensuring that even at rack scale, Venice can deliver data to the MI455 at full speed—this is the value of collaborative design.

We tie all of these components together with 800G Ethernet and our Pensando Vulcano and Salina networking chips, achieving ultra-high bandwidth and ultra-low latency and allowing tens of thousands of Helios racks to scale seamlessly within data centers.

To give everyone a tangible sense of its scale: each Helios rack contains over 18,000 CDNA 5 GPU compute units and more than 4,600 Zen 6 CPU cores, with AI performance reaching up to 2.9 exaflops; equipped with 31TB of HBM4 memory, it achieves industry-leading 260TB/s of scale-up bandwidth and 43TB/s of aggregate scale-out bandwidth, ensuring high-speed data flow.

In short, these numbers are truly impactful. Helios will be launched later this year, and the progress is fully on track; we believe it will set a new benchmark for AI performance.

To help everyone better understand this performance: just over 6 months ago, we launched the MI355, which improved inference throughput by 3 times compared to the previous generation; the MI455 takes this curve even higher, achieving up to 10 times performance improvement across various models and workloads—this is absolutely disruptive.

The MI455 enables developers to build larger models and more powerful intelligent agents and applications, and in these areas, no one is more forward-looking than OpenAI. Next, to discuss the future of AI and our collaboration, I am very honored to invite OpenAI President and Co-founder Greg Brockman to the stage! (Cheers from the audience)

Greg, I'm very glad you could come. OpenAI started all of this a few years ago with ChatGPT, and the progress has been incredible. We are extremely excited about the deep collaboration between us. Can you share with us the current progress, the trends you see, and the status of our collaboration?

OpenAI President and Co-founder Greg Brockman

Thank you very much for the invitation, I'm happy to be here.

ChatGPT seems to have "become famous overnight," but it is actually the result of seven years of hard work. We founded OpenAI back in 2015 with the vision of achieving Artificial General Intelligence (AGI) through deep learning, creating powerful systems that can benefit all of humanity — we hope to bring this technology to fruition and democratize its access.

Over the years, we have made steady progress step by step, with benchmark test scores improving year by year, but it wasn't until the launch of ChatGPT that we truly created a practical tool that countless people around the world are willing to use. The creative ways people use it have amazed us. I'm curious, how many people here are ChatGPT users? (Almost everyone in the audience raises their hands)

That's great, thank you all. But more importantly, how many people have gained experiences through it that are crucial to their own or their loved ones' lives — whether in healthcare, helping newborns, or other significant life scenarios? For me, that is the core metric we want to optimize.

Since 2025, there has been a real transformation: we have evolved from a simple "question-answer" text box into a tool that people use to handle important personal matters. This is evident not only in personalized healthcare but also deeply integrated into business scenarios — for example, transforming software engineering through the Codex model. I believe that this year, enterprise-level intelligent agents will truly explode.

Scientific discoveries are also being accelerated: a few months ago, we witnessed AI assisting in the development of a new mathematical proof for the first time, and this progress is ongoing. In all areas where human intelligence is required, as long as it can leverage and amplify human wisdom, AI can become an assistant, tool, and advisor, empowering people to achieve more possibilities.

AMD Chair and CEO Dr. Lisa Su

I completely agree with your view, Greg. We are indeed seeing the rapid expansion of this technology's applications. However, I have to say, every time I see you, you keep saying "we need more computing power," it's like a broken record. (Laughter) Can you share with us the bottlenecks you see in infrastructure and what directions the industry should focus on?

OpenAI President and Co-founder Greg Brockman

The core issue is "why do we need more computing power." In 2015, 2016, and 2017, model capabilities were still very limited; we only needed to train and evaluate models for a few specific, narrow tasks. But as models make exponential progress, their practical value also grows exponentially, and people want to bring them into more of their lives.

The current trend is a shift from "question and answer" to agentic workflows: you let the model help you write software, and it will run independently for minutes, hours, or soon even days. You are no longer operating a single intelligent agent, but a fleet of agents; for example, a developer can have 10 different workflows advancing in parallel.

In the future, when you wake up in the morning, ChatGPT will have already helped you handle some household and work to-do items — this is the future we aim to create. And all of this requires the "global demand for computing power" you mentioned earlier — we need much more computing power than we currently have.

I hope there is a GPU running behind every person in the world because I believe it can create value for everyone, but currently, no one can plan for such a large-scale computing infrastructure.

The real value lies in improving people's lives. For example, during the holiday period, there was a case where ChatGPT saved a life: one of my colleagues, whose husband had leg pain, went to the emergency room for an X-ray, and the doctor diagnosed it as a muscle strain, telling him to go home and rest. But the pain became increasingly severe, and she input the symptoms into ChatGPT, which prompted, "Return to the emergency room immediately, it could be a blood clot." As a result, he was diagnosed with a deep vein thrombosis in his leg, accompanied by two pulmonary embolisms — if they had followed the doctor's advice to wait, the consequences could have been fatal.

Such stories are not uncommon. ChatGPT literally saved the life of our CEO of applications, whom I work with closely every day: she was hospitalized for kidney stones and developed an infection, and the doctor was about to inject an antibiotic when she called a halt and used ChatGPT to check whether the antibiotic was suitable for her.

ChatGPT responded based on her complete medical history, "Not recommended" — because she had another infection two years ago, using that antibiotic could pose a fatal risk. She showed the result to the doctor, who was surprised and said, "I didn't know you had this medical history; I only had 5 minutes to review your medical records."

AMD Chair and CEO Dr. Lisa Su

I completely agree, Greg. We all need such a "helper." (Audience reaction)

You vividly illustrated why we need more computing power and the value that AI can achieve — AMD deeply resonates with this. Our engineering team has also engaged in in-depth collaboration with you, and many designs of MI455 and Helios stem from close communication between our teams. Can you share your infrastructure needs, customer expectations, and how you use MI455?

OpenAI President and Co-founder Greg Brockman

The evolution of AI is fundamentally about balancing various resources on GPUs. We have a slide that showcases the evolution of resource balance across different MI series products — to be honest, this slide wasn't created by me but was generated by ChatGPT.

It reviewed a vast amount of AMD materials, created charts, titles, and subtitles, not only providing answers but also generating presentable results — this is exactly what ChatGPT can do today.

We are moving towards a world where "intelligent agents do everything," which requires deep adaptation of hardware to application scenarios.

Our vision is that human attention and intent will become the most valuable resources, thus scenarios involving human interaction need extremely low latency, while simultaneously requiring massive, continuously operating high-throughput intelligent agent computing power. These two different scenarios pose diverse demands on hardware manufacturers like AMD, and we are very honored to collaborate with you.

AMD Chairwoman and CEO Dr. Lisa Su

We are happy to create products that meet your needs. (Audience response) So, Greg, let's talk about the future.

Some people may wonder, "Is there really a demand for AI computing? Do we really need so much AI computing power?" I know you and I agree on this — people may not be able to imagine the future you see. Can you paint a picture of the world a few years from now?

OpenAI President and Co-founder Greg Brockman

Looking back over the past few years, our computing power has tripled every year, and revenue has grown in tandem.

However, within OpenAI, every time we want to release new features or models and bring technology to the world, we face a "computing resource competition" — because we want to launch too many things, and computing power becomes a bottleneck, preventing many ideas from being realized.

I believe that in the future, the GDP growth of a country or region will be directly driven by the computing power it possesses. We have already seen early signs, and this trend will become increasingly evident in the coming years.

AI can not only benefit local communities through data centers, but its technology will also become a core driving force for improving quality of life — scientific progress is fundamentally about enhancing quality of life. In every specific field, we can see the limitations of traditional models: a certain discipline has accumulated a vast amount of expertise, but the number of experts is limited, making it difficult to pass on knowledge to the next generation.

For example, in the field of biology, we connected GPT-5 with wet lab equipment: humans only need to describe the laboratory environment, and the model proposes multiple experimental plans for humans to test. As a result, the efficiency of a specific protocol improved by 79 times, even approaching 100 times. And this was just a reaction that people had not previously invested much effort in optimizing, because there is so much unexplored space in the field of biology, and no human can be an expert in all subfields.

In the future, AI will break down the barriers between human disciplines — this trend is already evident in the healthcare sector: the more human knowledge increases and the higher the degree of specialization, the more AI will enable cross-domain empowerment.

Whether in corporate scenarios (where every application will be equipped with intelligent agents to accelerate human work) or in the most daunting challenges faced by humanity, AI will play a key role. The most difficult task for humanity will be how to utilize limited resources to create maximum value for everyone.

AMD Chair and CEO Dr. Lisa Su

This is an incredible vision, Greg. It is a great honor to collaborate with you, and we undoubtedly have the power to change human lives. Thank you for your partnership, and I look forward to more breakthroughs in the future. Thank you! (Applause)

As Greg mentioned, computing power is at the core, and the MI series products are driving transformation. The MI400 series provides a complete solution for cloud, enterprise, supercomputing, and sovereign AI scenarios: Helios is designed for cutting-edge performance needs, suited to large-scale training and rack-level distributed inference; for enterprise AI deployment, we launched the Instinct MI440X GPU, which offers leading training and inference performance in a compact 8-GPU server compatible with existing data center infrastructure; and for sovereign AI and supercomputing scenarios that require extremely high precision, the MI430X platform provides leading hybrid computing capabilities, supporting both high-precision scientific computing and AI data types.

This is precisely what makes AMD unique: with our chiplet technology, we can match the most suitable computing power to different application scenarios. But hardware is only part of the story. We firmly believe that an open ecosystem is key to the future of AI: history has repeatedly shown that when the industry collaborates around open infrastructure and shared technology standards, the speed of innovation increases significantly.

AMD is the only company that has achieved openness across the entire stack (hardware, software, and a broad solution ecosystem).

Our software strategy centers on ROCm, the industry's highest-performing open AI software stack. It provides "day-zero support" for the most widely used frameworks, tools, and model hubs, and is natively supported by top open-source projects such as PyTorch, vLLM, LangChain, and Hugging Face (which collectively have over 100 million downloads per month), making it easy for developers to build, deploy, and scale AI applications on the AMD platform right out of the box.
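As a rough illustration of what "out of the box" can look like in practice: ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` interface used for other GPUs, and `torch.version.hip` is set only on ROCm builds. The sketch below reports which backend a local install would use. The function name `describe_backend` is hypothetical, and nothing here was shown in the keynote.

```python
def describe_backend() -> str:
    """Return a one-line description of the GPU backend PyTorch would use."""
    try:
        import torch  # optional dependency; the sketch degrades gracefully without it
    except ImportError:
        return "PyTorch is not installed"

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    if not torch.cuda.is_available():
        return "PyTorch found, but no GPU is visible"

    # On ROCm builds, AMD GPUs are addressed through the regular torch.cuda API.
    name = torch.cuda.get_device_name(0)
    stack = f"ROCm/HIP {hip_version}" if hip_version else "CUDA"
    return f"{name} via {stack}"


print(describe_backend())
```

Because the device API is shared, code that selects `"cuda"` as its device generally does not need a separate code path for AMD hardware.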

An exciting AI company is using AMD and ROCm to power its models, and that is Luma AI. Next, let's welcome Luma AI's CEO and co-founder Amit Jain to the stage! (Applause)

Amit, welcome. You have done amazing work in the field of video generation and multimodal models. Can you share with us the mission of Luma and what you are currently working on?

Luma AI CEO and Co-founder Amit Jain

Thank you very much for the invitation, Dr. Lisa Su. It is an honor to be here. Luma's mission is to build multimodal general intelligence that allows AI to understand our world and help us simulate and optimize it.

Today's AI video and image models are still in a very early stage, primarily used for generating pixels and creating beautiful images, but what the world needs are smarter models that can integrate audio, video, language, and images.

Therefore, Luma is training systems that can simulate physical laws and causal relationships, autonomously retrieve tools, conduct research, and present results in suitable forms such as audio, video, images, and text. In short, we are modeling and generating the "world."

For example, I would like to show you the results of our latest model, Ray 3, which is the world's first video model with reasoning capabilities. It can "think" in pixels and latent features first, judging whether the content to be generated is reasonable; it is also the world's first model to support 4K and HDR generation. Please enjoy. (Demonstration video plays on-site)

AMD Chair and CEO Dr. Lisa Su

That's incredible! Can you share how customers are currently using Ray 3?

Luma AI CEO and Co-founder Amit Jain

Our partners include large enterprises and individual creators across all industries that require "storytelling," such as advertising, media, and entertainment. In 2025, customers began deploying and trialing our models, and by the end of the year, large-scale applications had emerged; some even used it to create a 90-minute feature film.

As usage deepens, the core demand from customers is "control and precision"—how to accurately present their creative ideas on screen? Our research found that the sense of control comes from intelligence, not better prompts—you cannot meet all needs by repeatedly modifying prompts. Therefore, we developed a new model, Ray 3 Modify, based on Ray 3, allowing users to "edit the world."

Let me show you its effects (demonstration video plays on-site)—this segment has no audio, so I will explain: what is being demonstrated on the screen is Ray 3's world editing capability. It can receive any real footage or AI-generated material and modify it to any extent according to your creative needs.

This is a powerful system we built for the most ambitious and demanding customers, widely used in entertainment, advertising, and other fields, opening a new era of human-machine collaborative production: humans use actions, rhythms, and directions as "prompts," and the model transforms them into final results.

This means that filmmakers and creators can now build complete movie universes without complex scenes, and can edit and modify at any time until they achieve the desired effect, something that was completely impossible before. In 2026, we will take it a step further: AI will be able to help you complete more tasks, even entire tasks end to end.

Our team is working hard to create the world's most powerful multimodal intelligent agent model. Using Luma's model, you will feel like you have a large elite creative team helping you realize your ideas. I want to show you a brief demonstration (demonstration video plays on-site): this is a brand new multimodal intelligent agent that can receive a complete script containing characters and ideas and present it to you in real time.

This is not a simple "script to movie" conversion, but rather human-computer interaction — our next-generation model can analyze multiple frames, long videos, make precise selections, while maintaining the coherence of characters, scenes, and stories, editing only when necessary. What you see is the collaboration between humans and AI in designing characters, environments, shots, and the entire world.

With these intelligent agents, creators will be able to independently produce complete stories that previously required large production teams, something that was unimaginable before. We have already applied this extensively internally and are incredibly excited about it. In the future, individual creators or small teams will have creative capabilities comparable to entire Hollywood studios.

AMD Chair and CEO Dr. Lisa Su

Incredible! I'm so glad to see Luma's intelligent agents achieving such results. I know you have many options on computing platforms; I remember when we first communicated, you reached out to me saying "we need computing power," and I felt that AMD could help. Can you share why you chose AMD and how the collaboration experience has been?

Luma AI CEO and Co-founder Amit Jain

Yes, we bet on AMD early on — that conversation was in early 2024, and since then, our collaboration has developed into large-scale synergy between teams. Today, 60% of Luma's rapidly growing inference workload runs on AMD chips.

Initially, we needed to do a lot of engineering adaptation, but now almost every workload we can think of runs out of the box on the AMD platform, thanks to the tireless efforts of your software team and the maturing of the ROCm ecosystem.

The multimodal models we are building have workloads far more complex than those of text models: for example, a 10-second video corresponds to as many as 100,000 tokens, while an LLM's response typically has only 200-300 tokens. When handling such vast amounts of information, total cost of ownership (TCO) and inference economics are crucial to our business; otherwise we cannot meet the growing demand.
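To put the figures quoted above side by side, here is a back-of-the-envelope sketch. The constant and function names are illustrative only, and the sole inputs are the numbers from the talk (up to 100,000 tokens for a 10-second video, 200 to 300 tokens for a typical LLM response).

```python
# Figures quoted in the talk; everything else is derived from them.
VIDEO_TOKENS = 100_000             # tokens for one 10-second video (upper figure)
VIDEO_SECONDS = 10
LLM_RESPONSE_TOKENS = (200, 300)   # typical text response, low and high


def tokens_per_video_second(tokens: int = VIDEO_TOKENS,
                            seconds: int = VIDEO_SECONDS) -> float:
    """Tokens the model must handle for each second of generated video."""
    return tokens / seconds


def video_to_text_ratio(video_tokens: int = VIDEO_TOKENS,
                        llm_range: tuple = LLM_RESPONSE_TOKENS) -> tuple:
    """How many typical text responses one video is worth, as (min, max)."""
    low, high = llm_range
    return video_tokens / high, video_tokens / low


ratio_min, ratio_max = video_to_text_ratio()
print(f"{tokens_per_video_second():.0f} tokens per second of video")
print(f"one clip is roughly {ratio_min:.0f}x to {ratio_max:.0f}x a text response")
```

On these numbers, a single 10-second clip carries a few hundred times the token volume of a text reply, which is why cost per token weighs so heavily in the argument.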

Through collaboration with the AMD team, we have achieved the best TCO performance in history. We believe that as we build more complex models (supporting autoregressive diffusion and simultaneously handling text, images, audio, and video), this collaboration will give us a significant advantage in cost and efficiency — which is crucial for the industry.

Therefore, we are confident in the collaboration and are scaling it up to ten times its previous size, with the MI455X GPU at its core. I am incredibly excited about the MI455X; the rack-level solutions, memory, and infrastructure you are building are essential for us to construct these complex simulation models.

AMD Chair and CEO Dr. Lisa Su

We are very happy to hear this, Amit. AMD's goal is to provide more powerful hardware, while your goal is to create incredible results with that hardware. Can you give us a brief outlook on what customers will be able to do in the coming years that is currently completely impossible?

Luma AI CEO and Co-founder Amit Jain

As Greg mentioned earlier, LLMs were mainly used for writing and drafting short emails in 2022 and 2023, but at that time, no one could have imagined they would be applied to real-time systems, healthcare, and other fields — thanks to improvements in accuracy, architecture, and scalability.

Today's video models are in a similar early stage, primarily used for generating videos and beautiful images, but as model scalability, accuracy, and data quality improve, they will be able to simulate physical processes in the real world: such as CAD architectural design, fluid dynamics simulation, rocket engine design, urban planning, etc. These simulations, which currently require large teams to complete manually, will be highly automated by AI in the future.

As model accuracy continues to improve, multimodal models will become the core of general robotic technology — your household robot will perform hundreds of simulations in its mind (through images and videos), thinking about "how to complete tasks" and "how to solve problems," with capabilities far exceeding current LLM and VLM-driven robots. This is exactly how the human brain works: humans are inherently multimodal, and our AI systems will be too in the future.

AMD Chair and CEO Dr. Lisa Su

This sounds incredibly exciting, Amit. Thank you very much for sharing and for your collaboration today, and we look forward to more breakthroughs from you in the future. Thank you! (Cheers from the audience)

As Greg and Amit mentioned, they need more computing power to build and run the next generation of models. This is a need all our customers share, which is why the demand for computing power is growing at an unprecedented rate. It means we need to keep pushing the limits of performance beyond what we have achieved so far.

The MI400 series is an important turning point, achieving leading performance across all workloads (training, inference, scientific computing), but we will not stop there: the development of the next-generation MI500 series is already in full swing.

The MI500, based on the next-generation CDNA 6 architecture, uses a 2nm process and features faster HBM, and is set to launch in 2027. By then, we will have achieved a 1000-fold increase in AI performance over four years, making more powerful AI accessible to everyone. (Cheers from the audience)
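For a sense of scale, the 1000-fold target can be converted into a yearly rate. The sketch below assumes the gain compounds evenly across the four years, which the talk does not state; the function names are illustrative.

```python
def annual_factor(total_gain: float, years: int) -> float:
    """Constant yearly multiplier that compounds to total_gain over `years`."""
    return total_gain ** (1.0 / years)


def cumulative_gain(yearly_factor: float, years: int) -> float:
    """Total gain after compounding a yearly multiplier for `years`."""
    return yearly_factor ** years


target = annual_factor(1000, 4)
print(f"1000x over 4 years needs about {target:.2f}x per year")

# For comparison: the "tripled every year" compute growth mentioned earlier
# in the talk compounds to 81x over the same four years.
print(f"3x per year over 4 years gives {cumulative_gain(3, 4):.0f}x")
```

Under that even-compounding assumption, the target works out to roughly 5.6x per year.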

Next, let’s shift our focus from the cloud to devices that make AI more personalized—personal computers (PCs).

For decades, PCs have been powerful tools that help us enhance productivity, whether for work or study. But with AI, PCs are no longer just tools; they have become indispensable partners in our lives—they can learn your work habits, adapt to your preferences, and even help you complete tasks at unimaginable speeds, even when offline.

AI PCs have already begun to unleash real value in various everyday tasks, from content creation and productivity enhancement to intelligent personal assistants. Let’s take a look at some application cases of AI PCs today: first, content creation—these videos were generated on the Ryzen AI Max PC using simple text prompts, without relying on the cloud, allowing anyone to create professional-level images and videos in minutes, without any design experience.

Microsoft is a key enabler of AI PCs, integrating next-generation capabilities directly into productivity tools: such as meeting management, quick email summaries, real-time document searches, and real-time translation for video conferences. Through Microsoft Copilot, advanced AI capabilities are deeply integrated into the Windows experience, allowing you to simply describe your needs, and the PC will automatically complete the tasks.

AMD foresaw the wave of AI PCs early on and prepared in advance, which is why we have led at every turning point: in 2023, we were the first to integrate dedicated AI engines into chips; in 2024, we were the first to launch x86 PCs that support Copilot+; with Ryzen AI Max, we created the first single-chip x86 platform capable of running 200-billion-parameter models locally. Today, we continue to maintain our leading position with the new generation of Ryzen AI laptop and desktop processors.

Today, I am proud to announce the all-new Ryzen AI 400 series—the most comprehensive and advanced family of AI PC processors in the industry! The Ryzen AI 400 integrates up to 12 high-performance Zen 5 CPU cores, 16 RDNA 3.5 GPU cores, and the latest XDNA 2 NPU, with AI computing performance reaching up to 60 TOPS, while supporting faster memory speeds. This flagship mobile processor significantly outperforms competitors in content creation and multitasking performance.

The market response to the Ryzen AI 400 series has been enthusiastic: if you visit CES this week, you will see many new laptops featuring this processor. The first batch of Ryzen AI 400 series PCs will begin shipping later this month, with over 120 ultra-thin laptops, gaming laptops, and commercial PCs from all major OEMs set to launch throughout the year, covering every AI PC form factor.

Driving the next generation of AI PC experiences requires not only powerful hardware but also smarter software—models that are lighter, faster, and capable of running directly on devices, which is fundamentally different from cloud-based models.

To delve into the next wave of model innovation, let's give a warm welcome to Ramin Hasani, co-founder and CEO of Liquid AI! (Audience cheers)

Ramin, it's great to have you here. I'm very much looking forward to the work Liquid AI is doing, as you have taken a completely different approach to model development. Can you share with the audience what Liquid AI is doing and how it differs from other companies?

Liquid AI Co-founder and CEO Ramin Hasani

Thank you very much for the invitation, Dr. Lisa Su. I'm happy to be here. Liquid AI is a foundational model company that spun out from MIT two and a half years ago, and we are building efficient general models that can run quickly on any processor, both inside and outside of data centers.

We adopt a "hardware-in-the-loop" approach, designing multimodal models from the ground up that can optimize neural network architectures for specific hardware—we do not build Transformer models; instead, we create Liquid foundational models: powerful, fast, and processor-optimized generative models.

Our goal is to fundamentally reduce the cost of intelligent computing without sacrificing quality. This means that Liquid models can deliver cutting-edge model performance directly on devices—whether it's a smartphone, laptop, robot, coffee machine, or airplane, anywhere there is computing power, it can run. Its core value lies in three points: privacy protection, speed, and continuity, seamlessly adapting to both online and offline workloads.

AMD Chair and CEO Dr. Lisa Su

Ramin, our teams have been working closely together to bring more powerful models to AI PCs. Can you share some progress in this area?

Liquid AI Co-founder and CEO Ramin Hasani

Of course. Today I have two new products to announce: first, we are very excited to launch Liquid Foundation Models 2—this is the most advanced "lightweight model" on the market, containing only 1.2 billion parameters, but its instruction-following capability outperforms other models in the same class, even surpassing some larger models (like DeepSeek and Gemini Pro).

The various instances of Liquid Foundation Models 2 are core modules for building reliable AI agents on any device. We will release five model instances: a chat model, an instruction model, a Japanese-enhanced language model, a visual language model, and a lightweight audio language model.

These models are highly optimized for AMD Ryzen AI's CPU, GPU, and NPU, and are now available for download on Hugging Face and our own platform, Leap, for everyone to experience at any time.

Secondly, we can combine instances of these Liquid Foundation Models 2 to build intelligent agent workflows. However, integrating all modalities into a single model would provide an even more amazing experience, which brings me to my second announcement: Liquid 3 (LFM3).

It is a native multimodal model capable of processing text, visual, and audio inputs, and outputs in audio and text formats, supporting 10 languages, with processing latency for audio and video data of less than 100 milliseconds. Liquid 3 will be launched later this year.

AMD Chair and CEO Dr. Lisa Su

That's great! Ramin, can you help explain to the audience why they should be excited about Liquid 3? What functionalities can these models achieve on AI PCs?

Liquid AI Co-founder and CEO Ramin Hasani

Of course. Most AI assistants and copilots today are "reactive agents" — they respond only when you open the app and ask a question. But when AI runs quickly on the device and is always online, it can proactively handle tasks for you, working silently in the background.

Let me show you a brief demonstration, which is a reference design aimed at inspiring everyone: what possibilities we can achieve on a PC equipped with Liquid models. Imagine you are a sales manager using an AMD Ryzen laptop with Liquid 3 to process spreadsheets, deeply focused. Notifications are constantly coming in, including a calendar reminder for a sales meeting, but you want to continue focusing on data processing — at this moment, Liquid's proactive intelligent agent recognizes the meeting reminder and proactively suggests "attending the meeting on your behalf." Once you agree, you can continue focusing on data analysis while the agent participates in the meeting on your behalf in the background.

AMD Chair and CEO Dr. Lisa Su

Can we really trust this agent? I'm a bit worried it might "mess things up." (laughs)

Liquid AI Co-founder and CEO Ramin Hasani

This system does not just transcribe meeting content; it truly understands the meeting process. It can also interact with your email platform, analyzing incoming emails in real time and drafting a reply to each one through its deep research functionality. All operations are under your complete control, with no surprises.

Everything runs offline on the device side, ensuring privacy. This system can provide better summarization and task processing capabilities than reactive agents. I believe this year will be the inaugural year of "proactive intelligent agents." I am very excited to announce that we are collaborating with Zoom to integrate these features into the Zoom platform.

AMD Chair and CEO Dr. Lisa Su

So exciting! (Cheers from the audience) Ramin, you have shown everyone the potential of AI truly integrated into PCs. We are confident in our collaboration and look forward to more achievements in the future. Thank you!

Liquid AI Co-founder and CEO Ramin Hasani

Thank you, Dr. Su, and thank you for the invitation from AMD!

AMD Chair and CEO Dr. Lisa Su

(Cheers from the audience) Everyone has seen the potential of local AI, but the latest PCs can not only run AI models but also "build" AI models — this is precisely the purpose of creating the Ryzen AI Max: to provide the ultimate PC processor for creators, gamers, and AI developers.

It is the world's most powerful AI PC platform, equipped with 16 high-performance Zen 5 CPU cores, 40 RDNA 3.5 GPU compute units, and XDNA 2 NPU, with AI performance reaching up to 50 TOPS. Through a unified memory architecture, it supports CPU, GPU, and NPU sharing up to 128GB of memory.

In high-end laptops, the Ryzen AI Max significantly outperforms the latest MacBook Pro in AI and content creation applications; in small workstations, its performance is comparable to the NVIDIA DGX Spark at a much lower price, delivering up to 1.7 times the tokens per second per dollar when running the latest open-source GPT models.

At the same time, the Ryzen AI Max natively supports Windows and Linux systems, allowing developers full access to their preferred software environments, tools, and workflows. There are already over 30 Ryzen AI Max devices on the market, with new laptops, all-in-ones, and compact workstations being launched at CES and continuing to be released throughout the year.

But AMD's mission is to "make AI ubiquitous and benefit everyone." We know that many AI developers here want a platform that allows for "development at any time," so we are taking it a step further. Today, I am excited to announce the AMD Ryzen AI Halo, a brand new reference platform for local AI deployment! (Cheers from the audience)

Do you think it's stunning? Let me introduce it: this is the world's smallest AI development system, capable of running models with up to 200 billion parameters locally without connecting to any external devices. It is equipped with our top-of-the-line Ryzen AI Max processor and 128GB of high-speed unified memory (shared by CPU, GPU, and NPU), which greatly enhances system performance, allowing large AI models to run efficiently on a desktop PC the size of your palm. Thank you, everyone! (Cheers from the audience)
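A quick sizing sketch shows how the two figures above (200 billion parameters, 128GB of unified memory) can be reconciled. The keynote does not say what numeric precision is used, so the bit widths below are assumptions chosen for illustration, and the function names are hypothetical. Only the weights are counted.

```python
def weight_footprint_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate size of the model weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


def fits_in_memory(num_params: float, bits_per_param: int, memory_gb: float) -> bool:
    """True if the weights alone fit within the given memory budget."""
    return weight_footprint_gb(num_params, bits_per_param) <= memory_gb


PARAMS = 200e9            # 200 billion parameters, as quoted
UNIFIED_MEMORY_GB = 128   # shared by CPU, GPU, and NPU, as quoted

# Assumed precisions: 16-bit, 8-bit, and 4-bit weights.
for bits in (16, 8, 4):
    size = weight_footprint_gb(PARAMS, bits)
    verdict = "fits" if fits_in_memory(PARAMS, bits, UNIFIED_MEMORY_GB) else "does not fit"
    print(f"{bits}-bit weights: {size:.0f} GB, {verdict} in {UNIFIED_MEMORY_GB} GB")
```

Of the three assumed precisions, only 4-bit weights stay under the 128GB budget. The key-value cache and activations need additional memory on top of the weights, so the real headroom is smaller than this estimate suggests.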

Halo natively supports multiple operating systems and comes pre-installed with the latest ROCm software stack and mainstream open-source developer tools, with hundreds of models ready to use right out of the box. It provides developers with everything they need to build, test, and deploy local intelligent agents and AI applications. You might be curious about its launch time: Halo will be released in the second quarter of this year, and we can't wait for you to experience it firsthand.

Next, let's turn to the gaming and content creation field. (Cheers from the audience) I know there are many gamers present, right?

Every day, gamers and creators rely on AMD's products — from Ryzen and Radeon PCs, Threadripper workstations, to Sony and Microsoft's gaming consoles, AMD delivers billions of frames each year. Over the years, the visual quality of graphics has made tremendous progress, but the way 3D worlds are built has hardly changed: teams still need months or even years to create a 3D experience.

And AI is starting to change all of that. To showcase the future of 3D world creation, I am honored to invite one of the most influential figures in the AI field, the scientist known as the "Godmother of AI": Dr. Fei-Fei Li, co-founder and CEO of World Labs! Her research has fundamentally changed the way machines "see" and understand the world, so let's welcome her with applause! (Cheers from the audience)

Fei-Fei, I’m so glad you could come. You have been a leader in shaping AI development for decades; could you share your views on the current state of AI development and the motivation behind founding World Labs?

World Labs Co-founder and CEO Dr. Fei-Fei Li

Thank you very much for the invitation, Lisa, and congratulations on all the new products released by AMD; I can't wait to experience them! (Laughs) In the past few years, AI has indeed made remarkable breakthroughs. As you mentioned, I have been deeply involved in this field for over twenty years, and this is the most exciting time in AI development I have seen.

In recent years, language-based AI technologies have swept the globe, with various capabilities and applications emerging. But in fact, human intelligence goes far beyond language: we are not just passive observers of the world; we are beings with exceptional spatial intelligence, possessing deep capabilities that connect perception and action.

Think about it, everyone here today, whether navigating through the airport, heading from the hotel to the coffee shop, or finding the venue in the "maze" of Las Vegas, relies on spatial intelligence. What excites me is that generative AI technology has ushered in a new wave, whether it's embodied AI or generative AI, we can finally endow machines with spatial intelligence close to human levels—this capability allows machines not only to perceive the world but also to create 3D or even 4D worlds, reason about the relationships between objects and humans, and imagine entirely new environments that follow the laws of physics and principles of dynamics (whether virtual or real). This is precisely the purpose of founding World Labs: to bring spatial intelligence to life and create value for humanity.

AMD Chairwoman and CEO Dr. Lisa Su

I still remember the first time we talked about the concept of World Labs; I could feel your enthusiasm. Can you share with the audience what your model can do, so everyone can intuitively understand the significance of "spatial intelligence"?

World Labs Co-founder and CEO Dr. Fei-Fei Li

I heard there are many gamers in the audience, which is so exciting! Traditionally, building 3D content requires laser scanners, calibrated cameras, or complex software for manual modeling. But at World Labs, we are creating a new generation of models that leverage the latest generative AI technology to learn the structure of the world directly from vast amounts of data—not just flat pixel structures, but 3D structures.

Provide the model with a few images, or even just one image, and it can fill in missing details, predict the scenes behind objects, and generate rich, coherent, persistent, and navigable 3D worlds. What you see on the screen now is the "Hobbit World" generated by our model Marble—we only gave it a few images, and it created these coherent 3D scenes that you can navigate and overlook; our system transformed a small amount of visual input into a vast 3D world that can be freely explored.

This demonstrates that our model can not only reconstruct environments but also "imagine" coherent, interconnected worlds—once these worlds are created, they can seamlessly connect, transitioning naturally from one environment to another and expanding to a larger scale. This is very similar to how humans can outline the entirety of a place with just a few glances.

AMD Chairwoman and CEO Dr. Lisa Su

Achieving such effects with so little input is truly amazing! Can you show us how this technology works?

World Labs Co-founder and CEO Dr. Fei-Fei Li

Of course. Let's return from the "Hobbit World" to a real scene and choose a familiar place. During the holidays, our team visited the AMD Silicon Valley office. Dr. Lisa Su, I hope they got your permission? (laughs)

AMD Chairwoman and CEO Dr. Lisa Su

They didn't! (laughs)

World Labs Co-founder and CEO Dr. Fei-Fei Li

But that's okay, now everyone can see the results. We just took a few photos with a regular smartphone camera, without any special equipment, and then fed these photos into Marble, World Labs' generative 3D model. With the help of the AMD Instinct MI325X chip and the ROCm software stack, the model created a 3D version of the environment, including the dimensions of windows, doors, and furniture, as well as depth and proportion.

Please remember, what you see is not a photo or video, but a truly coherent 3D world. Afterwards, our team felt that wasn't enough and decided to "remodel" it for free. Everyone can see which style they like; I personally really like the Egyptian style, maybe because I'm going to travel there in a few months. (laughs)

This transformation always maintains geometric coherence and the core features of the 3D input, so you can imagine it is a powerful tool in many scenarios: robot simulation, game development, design, etc. Work that traditionally takes months can now be completed in just a few minutes. We even did the same operation on the Venetian Hotel — just took photos yesterday, and after inputting them into the model, it turned the whole place into an imaginative 3D space.

I believe everyone can take a photo and send it to Marble to experience it personally. But what you don't see is the amount of computation in the background and the importance of inference speed — the faster the model runs, the more agile the world's response: instant feedback when the camera moves, immediate effect when editing, and the scene remains coherent as you explore, that's the key.

AMD Chairwoman and CEO Dr. Lisa Su

I think many people will definitely go to your website to try Marble! (laugh) But seriously, this technology is amazing. Can you share your experience of collaborating with AMD, the Instinct chips, and the Rockham software stack?

World Labs Co-founder and CEO Dr. Fei-Fei Li

Of course. Although Dr. Su and I are old friends, our business collaboration is relatively new, and to be honest, I am very surprised by how fast it has moved. Some of our models are real-time frame generation models, and it took only a week to adapt them to the AMD Instinct MI325X. After that, with the help of AMD Instinct and ROCm, our team improved performance more than fourfold in just a few weeks, which is very impressive.

This is crucial because spatial intelligence is fundamentally different from previous large language models (LLMs): understanding and navigating 3D structures, processing motion, and understanding physical laws require enormous memory, massive parallel computing power, and extremely fast inference. Seeing the new products you released, I can't wait to see platforms like the MI455 scale further. They will enable us to train larger world models and, more importantly, bring these environments to "life," allowing for instant responses as users or intelligent agents move, explore, interact, and create.

AMD Chairwoman and CEO Dr. Lisa Su

This is so exciting. Fei-Fei, with the continuous improvement in computing performance and ongoing innovations in models, can you give the audience a glimpse of the developments in the coming years?

World Labs Co-founder and CEO Dr. Fei-Fei Li

I know everyone knows me, and I don't like to exaggerate — I prefer to share facts. The world of the future will undergo tremendous changes, and many previously difficult workflows will be transformed by this amazing technology.

For example, creators can now sketch ideas in a "living world," experiencing and creating real scenes from their minds, exploring space, light, and motion — just like sketching in a real environment; intelligent agents (whether robots, vehicles, or tools) can learn in a highly realistic, physics-based digital world and then be deployed in the real world, making them safer, faster to develop, and more capable of assisting humans; designers (such as architects) can "walk into" their designs before construction begins, exploring forms, flows, and materials, rather than just viewing static aesthetic renderings.

What excites me the most is that this marks a shift in the role of AI in our lives: from passive systems that understand text and images to systems that not only understand but also help us interact with the world. Lisa, what we shared today, turning a handful of images into a coherent 3D world that can be explored in real time, is no longer a glimpse of a distant future, but the beginning of a new era.

Of course, we also know that while AI technology is powerful, we have a responsibility to develop and deploy it in ways that reflect core human values: enhancing human creativity, productivity, and spirit of cooperation, always keeping humanity at the core, no matter how powerful the technology becomes. It is a great honor to embark on this journey with AMD and you.

AMD Chairwoman and CEO Dr. Lisa Su

Fei-Fei, I think I can speak for everyone when I say you are a true source of inspiration in the field of AI. Congratulations on all your achievements, and thank you for your sharing tonight! Thank you! (Audience cheers)

Next, let's turn to the healthcare sector. (The audience quiets down, focusing)

Introduction to Healthcare

Among all the ways AI is changing the world, its impact on healthcare is the most profound and closely related to each of us. AMD technology is making what was once deemed "impossible" a reality: supercomputers are enabling early cancer detection through large-scale data analysis; computational modeling simulates complex biological systems, allowing patients to receive treatment faster; molecular simulations accelerate the development of potential therapies; genomic research personalizes medical services; and robotic-assisted surgeries improve patient outcomes. Our partners are leveraging AI to accelerate scientific progress and enhance human health—all empowered by AMD technology.

AMD Chair and CEO Dr. Lisa Su

As you saw in the video, AMD technology is widely applied in the healthcare field, making it one of the most meaningful application scenarios. Tonight, you have heard some stories, and the combination of high-performance computing and AI is a field I am personally passionate about—how to empower healthcare with technology.

In our lives, nothing is more important than our own health and that of our loved ones. Improving healthcare outcomes with technology means that our progress should be measured by the "lives saved."

Tonight, I am honored to invite three experts who are leading the application of AI to real healthcare challenges. Let's give a warm welcome to Absci CEO Sean McClain, Illumina CEO Jacob Thaysen, and AstraZeneca's Head of Molecular AI Ola Engkvist! (Audience cheers)

Thank you all for being here; the enthusiasm for healthcare is evident. Thank you for your deep collaboration. Sean, Absci is using generative models and synthetic biology to design new drugs from scratch. Can you share with us the principles behind this?

Absci CEO Sean McClain

Thank you for the invitation; I am very happy to be here. Biology is a complex and intricate discipline, and traditional drug discovery and development methods are very outdated, essentially a "trial and error" approach, like searching for a needle in a haystack. But with generative AI and Absci's technology, we can proactively "create that needle": precisely designing the biological characteristics we want in a drug and targeting diseases with unmet medical needs, while ensuring the drug is manufacturable and developable.

Now, with the combination of AI and biology, we have achieved "precision engineering": just as Apple designs the iPhone and AMD designs the MI455, we are "designing biology." The direct impact is that we can begin to tackle some of the most challenging diseases, those with significant medical needs but poor existing treatment options.

At Absci, this is precisely our core goal. We focus on two areas. The first is androgenetic alopecia, better known as common hair loss: in the near future, we hope to cure hair loss through AI, isn't that exciting? (light laughter on site) The second is a long-neglected area: women's health. For too long, women's health issues have been marginalized, and we are developing a drug for endometriosis (which affects one in ten women) that aims to be a disease-modifying therapy.

This is exactly where AI adds value in drug discovery, and all of this is made possible through our compute collaboration with AMD. Lisa, you and Mark Papermaster invested in Absci about a year ago, and over that year our inference capacity has scaled up to screening over 1 million drugs in a single day, which is incredible. Moreover, with the memory advantages of the MI355, we can bring in biological context in ways that were previously unattainable, ultimately creating better drug discovery models. The future of AI and drug discovery is bright.

AMD Chair and CEO Dr. Lisa Su

This is so exciting, Sean. Thank you for your collaboration; we are looking forward to the work ahead together. Jacob, Illumina is a global leader in interpreting the human genome and improving health. How does AI assist your work? What impact does it have on the future of precision medicine?

Illumina CEO Jacob Thaysen

Thank you very much for the invitation, I am excited to be here. Like AMD, we are passionate about improving human health. I look forward to achieving more together in the future — of course, Sean, I am also very much looking forward to that hair loss drug. (laughs)

Let me briefly introduce Illumina: we are the global leader in DNA sequencing, and DNA, as everyone knows, is the blueprint of life, making each person unique.

Therefore, accurately measuring DNA is crucial for the prevention, diagnosis, and treatment of diseases. Simply put, the human genome contains 3 billion "letters," equivalent to a 200,000-page book, and every person's cells contain such a "book."

If there is a "spelling mistake" in this book, it could mean the difference between a long, healthy life and a short, painful one. Precise DNA sequencing is therefore essential, but it requires handling massive amounts of data and has extremely high computational demands. In fact, our sequencers generate more data daily than YouTube does. That is why collaboration with AMD is crucial: our sequencers use AMD FPGAs and EPYC processors every day, which is the only way we can process all that data and turn it into insights.

Over the past decade, our technology has played a role in drug discovery and has had a profound impact on the current healthcare field—used for cancer typing, genetic disease diagnosis, etc., helping to save millions of lives. But we are just getting started; the complexity of biology far exceeds the understanding capacity of the human brain. The combination of generative AI with genomics and proteomics will fundamentally change our understanding of biology.

In the coming years, this will not only affect drug discovery but will also change the way we prevent and treat diseases early—truly transforming our understanding of longevity and healthy living. All of this requires collaboration among us, everyone present, and the entire ecosystem, and I am incredibly excited about it.

AMD Chair and CEO Dr. Lisa Su

That's fantastic, Jacob. Ola, AstraZeneca is scaling AI in one of the world's largest drug discovery pipelines. Can you share how AI is changing the way you develop new drugs?

AstraZeneca Head of Molecular AI Ola Engkvist

Thank you, Lisa, and thanks to CES for the invitation. AstraZeneca is applying AI end to end, from early drug discovery through manufacturing to healthcare delivery. For us, it's not just about productivity; it's about innovation: how to work differently and achieve unprecedented things with AI.

One of the areas I am personally most passionate about is how to deliver drug candidates faster with generative AI.

Our approach is to train generative AI models on decades of accumulated experimental data and then use these models to virtually evaluate which hypotheses and drug candidates might be effective. We can assess millions of potential drug candidates in a short time and only bring those we believe have real potential into the experimental lab to validate the hypotheses.

In simple terms, we use generative AI models to generate, modify, and optimize drug candidates, significantly reducing the number of laboratory experiments. This new way of working is being promoted across AstraZeneca's entire small molecule drug pipeline, and we have found that the speed of delivering drug candidates has increased by 50%, and the subsequent clinical success rate has also improved.

None of this would be possible without collaboration—we need to work together with academia, AI startups, and large companies like AMD. For us, ultra-scale computing is crucial: we have vast amounts of high-quality data and hope to build the optimal model. Our collaboration with AMD has enabled us to scale drug discovery, handle these massive new datasets, and optimize the entire process with AI—it's incredibly exciting.

AMD Chair and CEO Dr. Lisa Su

Your stories are all so inspiring, and it's a great honor to work with you to turn these visions into reality. To wrap up: what is the one thing each of you is most looking forward to in how AI will improve healthcare? Jacob, you go first.

Illumina CEO Jacob Thaysen

For me, the most exciting thing is that, for the first time, we have all three pieces at once: the technology to generate massive amounts of data, sufficient computing power, and generative AI models that can truly change our understanding of biology. All of this will translate into significant advances in healthcare.

AstraZeneca Head of Molecular AI Ola Engkvist

I believe that AI will fundamentally change our understanding of biology, allowing us to move from "treating diseases" to "preventing chronic diseases" — this is the ambition that the entire industry should uphold.

AMD Chair and CEO Dr. Lisa Su

That's fantastic. Sean, why don't you wrap it up.

Absci CEO Sean McClain

Of course. Continuing Ola's point, I hope to live in a world where we can proactively intervene before people get sick, providing medications and treatment plans that keep them in a healthy metabolic state, with thick hair (light laughter in the audience) and the vitality we all crave.

We need to shift from "disease treatment" to "preventive healthcare," ultimately achieving "regenerative biology and regenerative medicine" — making aging no longer a linear process. This is the world that AI will help us create, and it is an exciting time.

AMD Chair and CEO Dr. Lisa Su

(Audience cheers) Sean, I think we are all inspired by your vision. As I understand it: AI will help us predict diseases, prevent diseases, provide personalized treatments, and ultimately extend human lifespan. You are at the forefront of this field, and it is a great honor to be your partner. Thank you all for sharing tonight, and I look forward to advancing this cutting-edge field together in the coming years. Thank you! (Audience cheers)

Next, let’s enter the world of "Physical AI" — this is the realm where AI enters the real world, powered by high-performance CPUs and leading adaptive computing, enabling machines to understand their surroundings, take action, and achieve complex goals.

At AMD, we have spent over twenty years building the foundation for Physical AI. Today, AMD processors power factory robots with micron-level precision, guide inspection systems on infrastructure construction sites, and support surgeries that are less invasive and allow faster recovery, all achieved in collaboration with a broad ecosystem of partners.