Morgan Stanley's Heavyweight Robotics Yearbook (II): Robots "Escape the Factory," Training Focus Shifts from "Brain" to "Body," Edge Computing Expected to Explode

Morgan Stanley says AI robots are moving out of factories into complex real-world settings, and that the focus of training is shifting from AI model optimization to physical action execution. Robots need to gather real-world physical data through teleoperation, simulation training, and video learning. As application scenarios expand, the need for real-time decision-making grows, making edge computing a necessity. The firm predicts that by 2050, 1.4 billion robots will be sold globally, driving edge AI computing demand to the equivalent of millions of B200 chips and reshaping the distribution of global computing infrastructure.

Morgan Stanley recently pointed out that AI-driven robots are undergoing a historic shift from factory workshops to broader application scenarios, with training focus shifting from traditional cognitive abilities to physical manipulation skills. This change is expected to spur explosive growth in edge computing demand.

On December 15, according to Hard AI news, Morgan Stanley noted in its latest report, "Robot Yearbook (Volume II)," that the global robotics industry is undergoing two key transformations: first, robot application scenarios are "escaping" from factories into unstructured environments such as homes, cities, and space; second, the training focus is shifting from the traditional AI "brain" (general models) to the "body" (physical action control).

Morgan Stanley pointed out that this transformation will drive an explosion in edge computing demand, with real-time inference chips, simulation technology, and robotic sensors potentially becoming core investment themes. The report emphasizes that the complexity of the physical world (such as force control when grasping objects and navigation in dynamic environments) is forcing the technology roadmap to shift from "pure software optimization" to "hardware-software collaboration," and that distributed edge computing may reshape the global computing infrastructure landscape.

Morgan Stanley predicts that by 2050, 1.4 billion robots will be sold globally, which will drive edge AI computing demand to reach the equivalent of millions of B200 chips, reshaping the distribution pattern of global computing infrastructure.

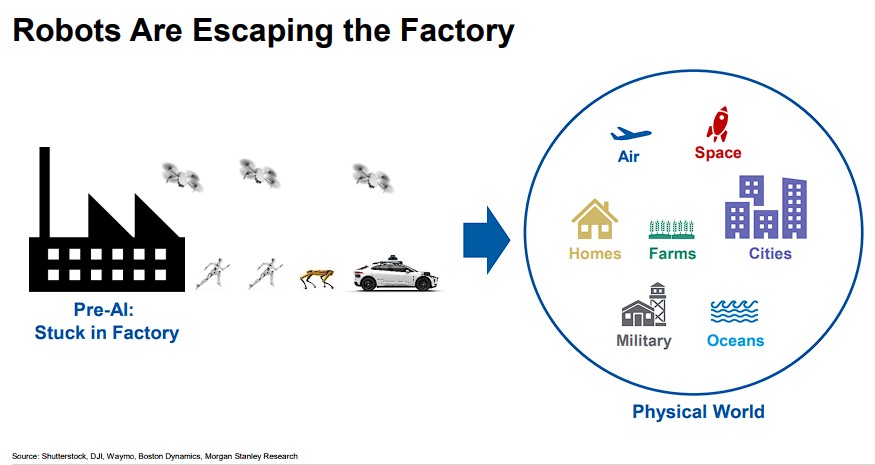

Robots "Escape the Factory": From Structured Cages to Complex Realities

Traditional industrial robots (Pre-AI Robotics) are confined to the "structured cages" of factories: they perform a single task (such as repetitive assembly), work in controlled environments (fixed production lines), and need no perception or learning capabilities.

Morgan Stanley pointed out that the new generation of AI-enabled robots is breaking through these limitations and beginning to enter homes, farms, city streets, the deep sea, and even space. Examples include autonomous vehicles navigating crowded roads, service robots grasping objects in homes, and drones inspecting complex terrain.

The report uses the example of "grasping a bottle from the refrigerator" to illustrate the challenges of the physical world:

What seems like a simple action for humans actually involves multiple variables, such as precise finger positioning, body balance adjustment, grip control (too tight and the bottle is crushed, too loose and it drops), and the effect of ambient humidity on friction.

Morgan Stanley pointed out that this means robots must possess real-time perception, dynamic decision-making, and fine motor control capabilities, rather than relying solely on preset programs.
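The grip-control trade-off in the bottle example can be made concrete with a rough sketch. It assumes a simple two-finger pinch grasp held only by Coulomb friction, so each finger must press with at least m·g / (2·μ). The bottle mass, friction coefficients, and crush threshold below are illustrative assumptions, not figures from the report, and the function names are hypothetical.

```python
G = 9.81  # gravitational acceleration in m/s^2


def min_grip_force(mass_kg: float, mu: float) -> float:
    """Minimum normal force per finger (N) for a two-finger pinch grasp.

    Friction from both fingers must carry the weight: 2 * mu * N >= m * g.
    """
    if mu <= 0:
        raise ValueError("friction coefficient must be positive")
    return mass_kg * G / (2 * mu)


def grip_window(mass_kg: float, mu: float, crush_force_n: float):
    """Return the (too loose, too tight) force bounds, or None if no safe force exists."""
    lower = min_grip_force(mass_kg, mu)
    return (lower, crush_force_n) if lower < crush_force_n else None


# All values below are assumed for illustration only.
bottle_mass_kg = 0.5
crush_force_n = 15.0
mu_dry = 0.6   # assumed friction coefficient for a dry surface
mu_wet = 0.2   # assumed friction coefficient for a slippery, condensation-covered surface

dry_window = grip_window(bottle_mass_kg, mu_dry, crush_force_n)
wet_window = grip_window(bottle_mass_kg, mu_wet, crush_force_n)
print("dry surface window (N):", dry_window)
print("wet surface window (N):", wet_window)
```

Under these assumed numbers the safe force range narrows sharply when friction drops, which is one way to see why a preset grip force is not enough and the robot has to sense and adjust in real time.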

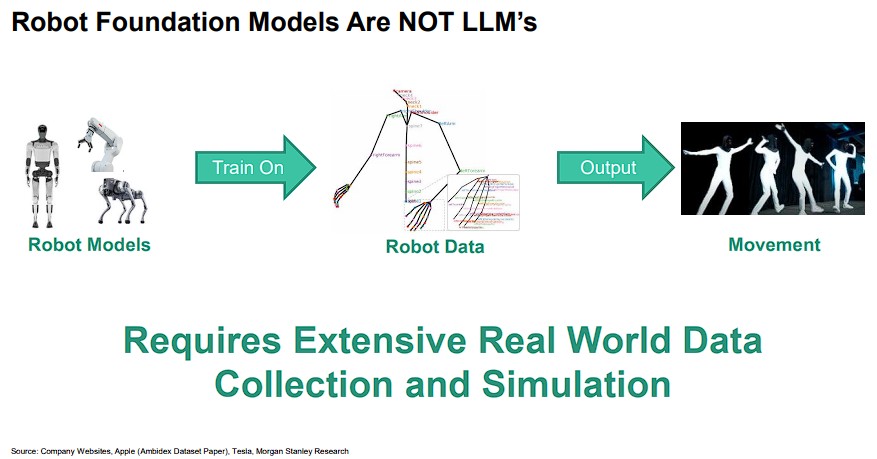

Shift in Training Paradigms: From "Brain" Optimization to "Body" Control

The report states that early robot training focused on the "brain" (AI models), for example by optimizing general vision-language models (VLMs). However, Morgan Stanley emphasizes that the bottleneck has now shifted to the "body" (physical action execution). The core tension is that basic skills that are instinctive for humans (such as walking and grasping) are extremely complex for AI (Moravec's paradox), and these skills cannot easily be learned from internet text and image data. According to Morgan Stanley's research, unlike large language models, which train primarily on text and image data, robotic models require large amounts of real-world physical operation data, making data collection and model training more complex and expensive.

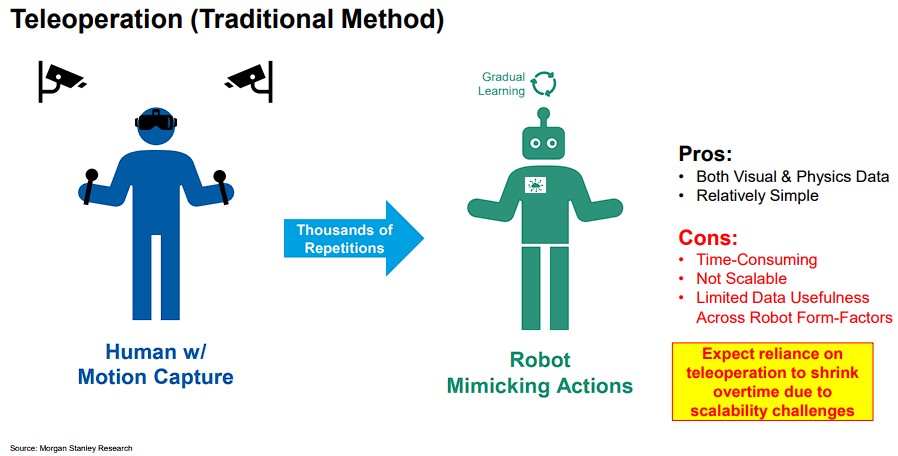

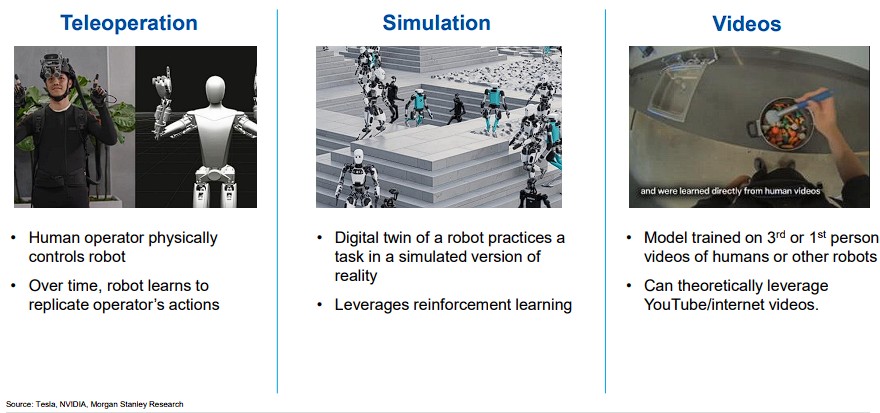

The firm pointed out that tech giants like Tesla, NVIDIA, and Google are collecting training data through three main methods: teleoperation, simulation training, and video learning.

Teleoperation: Humans control robots via motion capture so that the robots can imitate their movements. However, this method is time-consuming and scales poorly, and it may gradually be replaced.

Simulation: Complex scenarios (such as extreme weather and obstacles) are reproduced without limit in a virtual environment using digital twins, combined with reinforcement learning to optimize actions. Game engine makers (such as Epic Games, the developer of Unreal Engine, and Unity) are deeply involved, and NVIDIA's Omniverse platform builds on the company's accumulated gaming GPU technology.

Video Learning: Action patterns are extracted from videos of human behavior (such as YouTube videos) to train models without physical interaction. "World models" such as Google DeepMind's Genie 3 and Meta's V-JEPA 2 take similar approaches to predict object motion trajectories and the results of physical interactions.

Surge in Edge Computing Demand: Real-time Inference and Distributed Computing

As robots "escape the factory," the latency of centralized cloud computing becomes a problem (autonomous driving, for example, requires millisecond-level decisions), making edge computing a necessity. Morgan Stanley pointed out that edge computing will show two major trends:

1. Popularization of Dedicated Edge Chips

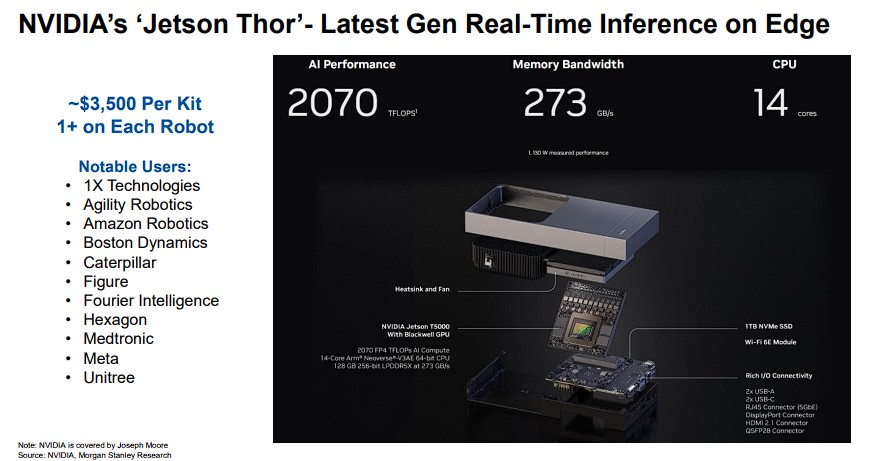

NVIDIA's Jetson Thor is a typical example: an edge real-time inference device priced at about $3,500 per unit and already adopted by companies such as Boston Dynamics and Amazon Robotics. Its core advantage is delivering high computing power at low power consumption, which meets the real-time requirements of robots (such as dynamic obstacle avoidance).
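To illustrate why decision latency matters for real-time tasks such as obstacle avoidance, the sketch below estimates how far a moving robot travels before a decision arrives. The speed and the two latency values are hypothetical assumptions chosen for illustration; they are not measurements and do not come from the report.

```python
def distance_during_latency(speed_m_s: float, latency_ms: float) -> float:
    """Distance (m) covered while waiting latency_ms for a decision."""
    return speed_m_s * latency_ms / 1000.0


# Assumed values for illustration only.
vehicle_speed_m_s = 30.0        # roughly highway speed
cloud_round_trip_ms = 100.0     # hypothetical network round trip plus inference
on_device_ms = 10.0             # hypothetical on-board inference time

cloud_blind_m = distance_during_latency(vehicle_speed_m_s, cloud_round_trip_ms)
edge_blind_m = distance_during_latency(vehicle_speed_m_s, on_device_ms)

print(f"distance travelled waiting on the cloud: {cloud_blind_m:.1f} m")
print(f"distance travelled waiting on the edge chip: {edge_blind_m:.1f} m")
```

With these assumed inputs, the distance covered before a decision arrives scales linearly with latency, so cutting the round trip by a factor of ten cuts that distance by the same factor.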

2. Distributed Inference Network

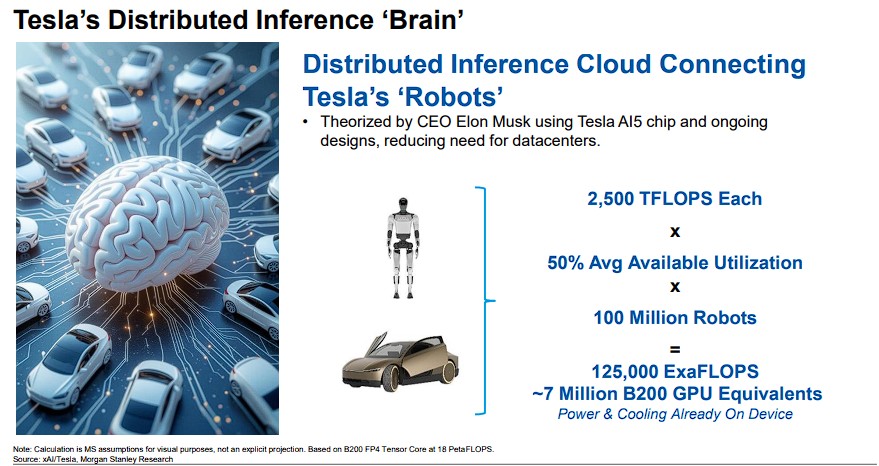

Tesla proposes the concept of "robots as computing power nodes": if 100 million robots, each with 2,500 TFLOPS of computing power, are deployed globally, then at 50% utilization they could provide 125,000 ExaFLOPS of computing power, equivalent to about 7 million NVIDIA B200 GPUs (each with 18 PetaFLOPS). This distributed model not only reduces reliance on data centers but also improves overall efficiency through collaboration among robots.
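The arithmetic behind these figures can be checked with a short sketch. Every input is a number quoted in the paragraph above; only the variable and function names are illustrative.

```python
TFLOPS = 10**12
PFLOPS = 10**15
EXAFLOPS = 10**18

robots = 100_000_000              # 100 million robots deployed
per_robot_flops = 2500 * TFLOPS   # 2,500 TFLOPS per robot
utilization = 0.5                 # 50% utilization
b200_flops = 18 * PFLOPS          # 18 PetaFLOPS per B200, as quoted


def fleet_compute_flops(units: int, flops_per_unit: int, util: float) -> float:
    """Usable fleet compute in FLOPS at the given utilization."""
    return units * flops_per_unit * util


usable_flops = fleet_compute_flops(robots, per_robot_flops, utilization)
usable_exaflops = usable_flops / EXAFLOPS
b200_equivalents = usable_flops / b200_flops

print(f"usable compute: {usable_exaflops:,.0f} ExaFLOPS")
print(f"B200 equivalents: {b200_equivalents / 1e6:.2f} million")
```

The result is 125,000 ExaFLOPS and just under 7 million B200 equivalents, which matches the rounded figure in the text.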

According to Morgan Stanley's forecast, global demand for robotic edge computing will increase significantly by 2030, with humanoid robots, autonomous vehicles, drones, and other forms of robots all adding substantially to computing power demand. By 2050, 1.4 billion robots will be sold globally, driving demand for edge AI computing power to the equivalent of millions of B200 chips.