"World Model" competition upgraded: Runway launches GWM-1, real-time interaction can last for several minutes

Runway is attempting to evolve from a "special effects supplier" for the film industry into an "AI architect" of the physical world. GWM-1 currently comes in three variants: GWM-Worlds, GWM-Robotics, and GWM-Avatars. Worlds supports real-time environment interaction and physics-based navigation training for agents; Robotics generates synthetic data under extreme conditions for robot policy evaluation; and Avatars uses a unified audio-visual architecture to sustain long, human-like conversations without quality loss.

The battlefield of AI video is evolving from a simple competition of image quality to a competition of understanding the physical world.

On December 11, AI video generation unicorn Runway released its first General World Model, GWM-1, formally entering the "world simulation" battlefield led by giants such as Google and Nvidia.

Unlike traditional AI video generation models, GWM-1 is designed as a simulation system capable of understanding physical laws, geometric structures, and environmental dynamics. Its core breakthroughs lie in "coherence" and "interactivity."

Runway claims that based on its understanding of physical laws and environmental dynamics, the model can simulate the process of the world evolving over time through frame-by-frame prediction and supports coherent real-time interaction lasting several minutes.

Breakdown of GWM-1: From "Pixel Prediction" to "General Simulation"

A "world model" is an internal simulation of how the real world works that an AI system builds for itself, which lets it reason, plan, and act without having to experience every real-world scenario.

"To build a world model, you first need to build a truly excellent video model," said Runway's Chief Technology Officer Anastasis Germanidis at the launch event. Teaching the model to directly predict pixels is the best path to achieving general simulation.

However, despite the "general" label, GWM-1 is currently a family of three autoregressive models (GWM-Worlds, GWM-Robotics, and GWM-Avatars), each fine-tuned for a different domain and all built on Runway's latest Gen-4.5 base model.

Runway clearly states that its ultimate vision is to unify these different domains and action spaces into a single foundational world model.

(1) GWM-Worlds: An Interactive Digital Environment Exploration Interface

First, let's look at GWM-Worlds.

As mentioned earlier, GWM-Worlds is an autoregressive model fine-tuned from Runway's latest Gen-4.5 base model. This means it generates video one frame at a time, predicting each new frame from the frames that came before it.

In other words, at any moment, users can intervene based on the application scenario, such as moving in space, controlling robotic arms, or interacting with intelligent agents, and the model will simulate what happens next.
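The frame-by-frame loop with user intervention can be pictured with a toy sketch. The Python below is only an illustration under stated assumptions: `predict_next` is a hypothetical stand-in for a learned next-frame predictor, a "frame" is reduced to a camera position on a grid, and none of the names correspond to Runway's actual API.

```python
from typing import List, Sequence, Tuple

# Assumption: a "frame" is reduced to a camera position on a 2D grid.
Frame = Tuple[int, int]

# Hypothetical action vocabulary; a real system would accept richer controls.
MOVES = {
    "left": (-1, 0),
    "right": (1, 0),
    "up": (0, 1),
    "down": (0, -1),
    "stay": (0, 0),
}


def predict_next(history: Sequence[Frame], action: str) -> Frame:
    """Toy stand-in for a learned model: next frame from past frames plus an action."""
    dx, dy = MOVES[action]
    x, y = history[-1]  # this toy only needs the latest frame
    return (x + dx, y + dy)


def rollout(first_frame: Frame, actions: Sequence[str]) -> List[Frame]:
    """Autoregressive rollout: each predicted frame is fed back in as context."""
    frames = [first_frame]
    for action in actions:  # the user may supply a different action at every step
        frames.append(predict_next(frames, action))
    return frames


if __name__ == "__main__":
    print(rollout((0, 0), ["right", "right", "up"]))
```

The point of the sketch is the feedback structure: because every output becomes input for the next step, an action injected at any step changes everything that follows.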

According to the official demonstration materials, the model provides an interface for exploring digital environments: users set a scene with a prompt or reference image, and the model generates the environment at 24 fps and 720p resolution.
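For a sense of scale, the back-of-the-envelope arithmetic below shows what real-time generation at these settings implies. It is a rough sketch with two labeled assumptions that do not come from Runway: that 720p means 1280x720 pixels, and that "several minutes" is taken as three minutes purely for illustration.

```python
FPS = 24                     # frame rate stated in the article
WIDTH, HEIGHT = 1280, 720    # assumption: the common 16:9 meaning of "720p"
SESSION_MINUTES = 3          # assumption: illustrative value for "several minutes"

# Time available to produce each frame if generation keeps up with playback.
frame_budget_ms = 1000 / FPS

# Number of frames that must stay coherent over one session.
frames_per_session = FPS * 60 * SESSION_MINUTES

# Raw pixels the model has to produce every second.
pixels_per_second = WIDTH * HEIGHT * FPS

print(f"{frame_budget_ms:.1f} ms per frame")     # 41.7 ms per frame
print(f"{frames_per_session} frames per session")  # 4320 frames per session
print(f"{pixels_per_second} pixels per second")    # 22118400 pixels per second
```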

Unlike traditional video generation, users can change the camera angle, environmental conditions, or object states in real time, and the model can understand geometry and lighting, ensuring that the generated images maintain coherence over long sequences of motion.

In addition to game design previews and VR environment generation, the deeper significance of GWM-Worlds may lie in providing a training ground for AI agents, teaching them how to navigate and act in the physical world.

(2) GWM-Robotics: Addressing the "Data Hunger" of Embodied Intelligence

If GWM-Worlds carries the genes of creative tools, then the launch of GWM-Robotics demonstrates Runway's ambition to enter the industrial and embodied intelligence fields.

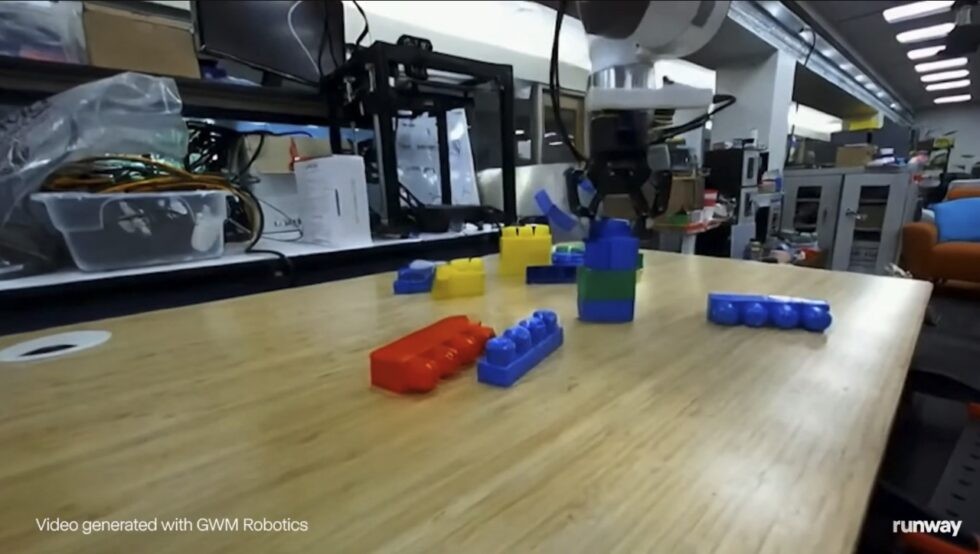

In robotics research and development, collecting real data for "long-tail scenarios" such as extreme weather and sudden obstacles is extremely costly. GWM-Robotics targets this pain point by generating high-quality synthetic data that simulates a range of environmental variables, so that robot policies can be evaluated in virtual space. This not only cuts training costs significantly but also allows compliance risks to be anticipated before robots are deployed in the real world.
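The general idea of evaluating a policy against synthetic scenario variations can be sketched in a few lines. Everything in the Python below is hypothetical and heavily simplified: a scenario is reduced to two numbers instead of generated video, and `sample_scenarios`, `cautious_policy`, and the safety rule are illustrative inventions, not part of the GWM-Robotics SDK.

```python
import random
from typing import Callable, Dict, List

Scenario = Dict[str, float]
Policy = Callable[[Scenario], float]  # returns a target speed in m/s


def sample_scenarios(n: int, seed: int = 0) -> List[Scenario]:
    """Draw synthetic scenarios; low visibility and near obstacles are the hard cases."""
    rng = random.Random(seed)  # seeded so an evaluation run is repeatable
    return [
        {
            "visibility": rng.uniform(0.1, 1.0),
            "obstacle_distance_m": rng.uniform(0.5, 10.0),
        }
        for _ in range(n)
    ]


def cautious_policy(scenario: Scenario) -> float:
    """Hypothetical policy: drive slower when visibility is poor."""
    return 4.0 * scenario["visibility"]


def is_safe(scenario: Scenario, speed: float, reaction_time_s: float = 1.0) -> bool:
    """Assumed safety rule: distance covered while reacting must not exceed the gap."""
    return speed * reaction_time_s <= scenario["obstacle_distance_m"]


def success_rate(policy: Policy, scenarios: List[Scenario]) -> float:
    """Fraction of scenarios in which the policy's chosen speed is safe."""
    if not scenarios:
        raise ValueError("need at least one scenario")
    safe = sum(is_safe(s, policy(s)) for s in scenarios)
    return safe / len(scenarios)


if __name__ == "__main__":
    scenarios = sample_scenarios(1000, seed=42)
    print(f"cautious policy success rate: {success_rate(cautious_policy, scenarios):.2%}")
```

Because the scenarios are cheap to sample, rare combinations such as very low visibility with a very near obstacle can be tested far more often than they would occur in real-world data collection.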

Runway says it is currently making GWM-Robotics available to selected enterprises through an SDK and is in active talks with several robotics companies. The move signals an attempt to build a new line of business with industrial enterprise (B2B) clients beyond simple SaaS subscription revenue.

(3) GWM-Avatars: A Unified Interactive Terminal for Video and Voice

GWM-Avatars targets human-computer interaction. This is a unified model that combines video generation with voice, and Runway claims that its generated digital humans can engage in long, continuous conversations with no loss in image quality.

If the technology works as claimed and can be scaled, it could have a disruptive impact on the customer service and online education industries.

Base Evolution and Computing Power Arms Race

While setting its sights on world models, Runway has not neglected its cash-cow business: it has also made a defensive upgrade to its flagship video generation base model to fend off competition from players such as Kling.

The concurrently released Gen-4.5 update fills previous gaps in native audio and multi-camera editing. The new version can generate videos up to one minute long with consistent characters, along with native dialogue and background sound effects, continuing the product's shift from a consumer "toy" to an enterprise "productivity tool."

It is worth mentioning that, to support the massive computing power needed as it moves from creative generation to world simulation, Runway has also announced an agreement with cloud service provider CoreWeave. According to Runway, it will use Nvidia GB300 NVL72 racks on CoreWeave's cloud infrastructure for future model training and inference.

Conclusion

From film creative tools to robotic simulators, Runway's strategic landscape is rapidly expanding. However, in the new arena of world models, it no longer holds the first-mover advantage it had during the early days of video generation.

Runway now faces giants such as Google and Nvidia, which have deep foundational resources and accumulated research. Whether it can use GWM-1 to prove that it is not just a "special effects supplier" for the film industry but can become an "AI architect" of the physical world will be key to judging whether its valuation can move to the next stage.