Bank of America AI Deep Dive: Where Are the Computing Power Opportunities in the AI Era?

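BofA notes that the computing power needed to train AI models grows 275-fold every two years. It expects next-generation computers to include high-performance computing, edge computing, spatial computing, quantum computing and biocomputing.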

Author: Li Xiaoyin

Source: Hard AI (硬 AI)

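In the post-Moore's-law era of exponential data growth, AI technology backed by powerful computing is flourishing, and its demand for computing power keeps rising.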

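AI training and inference costs keep climbing as models grow. Large language models (LLMs) have gone from 94 million parameters in 2018 to the commercially available GPT-3 with 175 billion parameters, and GPT-4 is expected to exceed 1 trillion. Some data suggest that the computing power needed to train an AI model grows 275-fold every two years.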
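To put the 275x-per-two-years figure in more familiar units, the back-of-envelope sketch below assumes the growth compounds smoothly. That smoothing is an assumption; the article gives only the two-year multiple. The function names are hypothetical.

```python
import math

# Figures quoted in the article
PARAMS_2018 = 94e6        # 94 million parameters (2018)
PARAMS_GPT3 = 175e9       # 175 billion parameters (GPT-3)
COMPUTE_GROWTH_2Y = 275   # training compute grows 275x every 2 years

def implied_annual_factor(growth_over_period: float, years: float) -> float:
    """Constant per-year multiplier that compounds to growth_over_period over `years`."""
    return growth_over_period ** (1 / years)

def doubling_time_months(annual_factor: float) -> float:
    """Months needed to double at a constant annual growth factor."""
    return 12 * math.log(2) / math.log(annual_factor)

annual = implied_annual_factor(COMPUTE_GROWTH_2Y, 2)
print(f"Parameter growth 2018 -> GPT-3: {PARAMS_GPT3 / PARAMS_2018:,.0f}x")
print(f"Implied annual compute growth: {annual:.1f}x")
print(f"Implied compute doubling time: {doubling_time_months(annual):.1f} months")
```

Under that assumption, 275x over two years works out to roughly 16.6x per year. That is a doubling about every three months.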

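Advances in data processing have driven the evolution of computers, but traditional processing units and large computing clusters cannot push past the limits of computational complexity. Moore's law is still advancing and being reinvented, yet it cannot keep pace with the demand for faster, more powerful computing.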

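NVIDIA CEO Jensen Huang has said bluntly that Moore's law is dead. As computing power keeps pushing against its limits, where do the AI opportunities lie?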

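In a deep-dive report published on March 21, Bank of America Merrill Lynch says next-generation computers will include high-performance computing (HPC), edge computing, spatial computing, quantum computing and biocomputing.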

High-performance computing (HPC)

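High-performance computing refers to computing systems that use supercomputers and clusters of parallel computers to solve advanced computational problems.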

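According to the report, HPC systems are typically more than one million times faster than the fastest desktops, laptops or servers. They are widely used in both established and emerging fields such as autonomous vehicles, the Internet of Things and precision agriculture.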

BofA believes HPC trends point to upside for accelerators in hyperscale systems, including those running LLMs.

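HPC accounts for only a small share of the data center total addressable market (TAM), about 5%. Even so, it tends to act as a leading indicator for cloud and enterprise applications.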

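This is especially true as LLMs demand ever more computing power. Of the 48 new systems on the TOP500 list, 19 use accelerators, an attach rate of roughly 40%. The TOP500 survey also suggests accelerator adoption has room to rise in hyperscale systems, where only about 10% of servers are currently accelerated.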
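The attach rate is simply accelerated systems divided by total systems. The sketch below reproduces the roughly 40% figure from the report's own counts and compares it with the roughly 10% of hyperscale servers the report says are accelerated. The function name is hypothetical.

```python
def attach_rate(accelerated: int, total: int) -> float:
    """Share of systems that ship with an accelerator (GPU/ASIC/FPGA)."""
    if total <= 0:
        raise ValueError("total must be positive")
    return accelerated / total

new_systems_rate = attach_rate(19, 48)   # new TOP500 systems, per the report
hyperscale_share = 0.10                  # ~10% of hyperscale servers accelerated, per the report

print(f"New-system attach rate: {new_systems_rate:.1%}")
print(f"Ratio vs hyperscale servers: {new_systems_rate / hyperscale_share:.1f}x")
```

The roughly fourfold gap between the two shares is what BofA reads as upside for accelerators at hyperscalers.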

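BofA points to a second trend: computing will increasingly shift from serial to parallel with the help of coprocessors. These are processors built to assist the CPU with work it cannot perform, or performs inefficiently.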

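The maturing of Moore's law and serial computing is moving more workloads onto parallel computing. This happens through discrete coprocessors and accelerators such as GPUs, custom chips (ASICs) and programmable chips (FPGAs).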
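The report does not give the math behind the serial-to-parallel shift. One standard way to reason about it, which is not taken from the report, is Amdahl's law: if a fraction $p$ of a workload can run on $n$ parallel units, the overall speedup is $S = 1 / ((1 - p) + p/n)$. The minimal sketch below assumes an idealized workload with no communication overhead.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Amdahl's law: overall speedup when only `parallel_fraction` of the
    work can be spread across `workers`; the rest stays serial."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if workers < 1:
        raise ValueError("workers must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / workers)

# Compare a small and a very wide accelerator for increasingly parallel workloads
for p in (0.50, 0.90, 0.99):
    print(p, [round(amdahl_speedup(p, n), 1) for n in (8, 1024)])
```

The takeaway is that wide accelerators such as GPUs pay off only for workloads that are overwhelmingly parallel. Large-model training and inference are such workloads, which is why they drive accelerator demand.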

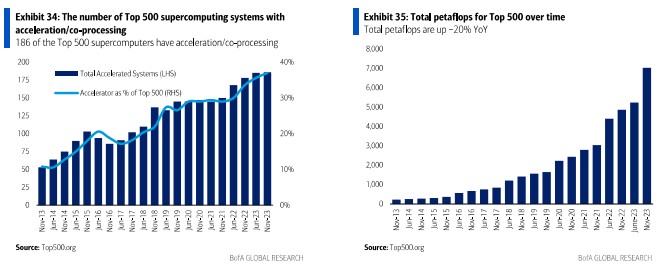

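As of November 2023, 186 machines on the TOP500 list used coprocessors, up from 137 systems five years earlier. Coprocessor/accelerator usage on the TOP500 was flat versus the previous list and up about 5% year over year. The combined performance of the TOP500 supercomputers grew to 7.0 exaflops, up 45% year over year.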
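The growth figures above can be turned around to recover the earlier baselines. The sketch below assumes the 45% is a simple year-over-year change on aggregate performance. The helper name is hypothetical.

```python
def prior_value(current: float, growth_rate: float) -> float:
    """Back out last period's value from the current value and its growth rate."""
    return current / (1.0 + growth_rate)

total_ef_2023 = 7.0  # aggregate TOP500 performance, Nov 2023 (exaflops)
print(f"Implied total a year earlier: {prior_value(total_ef_2023, 0.45):.2f} EF")
print(f"Accelerated systems vs five years earlier: {186 / 137 - 1:.0%} more")
```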

Spatial computing

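Spatial computing refers to computers that use AR/VR technology to blend the user's graphical interface into the real physical world, changing how humans interact with machines.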

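In fact, human-computer interaction is reaching a turning point. It is moving away from the traditional keyboard-and-mouse setup toward touch gestures, conversational AI and enhanced visual computing.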

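BofA believes that, after the PC and the smartphone, spatial computing could drive the next wave of disruptive change. It would make technology part of our everyday behavior and link our physical and digital lives through real-time data and communications.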

Apple's Vision Pro, for example, is a key step in this direction.

Edge computing

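Compared with cloud computing, edge computing processes data at locations physically closer to end devices. This gives it advantages in latency, bandwidth, autonomy and privacy. According to research firm Omdia, the "edge" is any location with a round-trip time of at most 20 milliseconds (ms) to the end user.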
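Omdia's definition is a simple latency threshold, so it translates directly into a classification rule. In the sketch below, the site names and RTT values are made up purely for illustration, and the `Site` type and `is_edge` helper are hypothetical.

```python
from dataclasses import dataclass

EDGE_RTT_LIMIT_MS = 20.0  # Omdia's threshold as quoted in the article

@dataclass
class Site:
    name: str
    rtt_ms: float  # measured round-trip time to the end user (illustrative values below)

def is_edge(site: Site, limit_ms: float = EDGE_RTT_LIMIT_MS) -> bool:
    """A site counts as 'edge' if its round trip to the user is at most limit_ms."""
    return site.rtt_ms <= limit_ms

# Hypothetical sites, not taken from the report
sites = [Site("on-prem gateway", 2.0), Site("metro PoP", 12.5), Site("regional cloud", 45.0)]
for s in sites:
    print(f"{s.name:16} {s.rtt_ms:5.1f} ms -> {'edge' if is_edge(s) else 'core/cloud'}")
```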

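BofA says many enterprises are investing in edge computing and edge locations, from internal IT and OT to external and remote sites, to get closer to end users and to where data is generated.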

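Tech giants Facebook, Amazon, Microsoft, Google and Apple are all investing in edge computing. BofA expects returns on that investment to drive these companies' share performance over the next five years.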

By 2025, 75% of enterprise-generated data is expected to be created and processed at the edge.

According to research firm IDC, the edge computing market is expected to reach $404 billion by 2028, a compound annual growth rate (CAGR) of 15% over 2022-28.
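IDC's two figures imply a starting market size. The back-of-envelope sketch below assumes 2022 is the base year and that growth is a constant 15% every year. The resulting 2022 figure is derived here, not quoted by IDC.

```python
def implied_base(future_value: float, cagr: float, years: int) -> float:
    """Starting value that grows to future_value at a constant CAGR over `years`."""
    return future_value / (1.0 + cagr) ** years

def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1.0 / years) - 1.0

base_2022 = implied_base(404.0, 0.15, 2028 - 2022)  # USD billions
print(f"Implied 2022 edge computing market: ~${base_2022:.0f}B")
print(f"Check: CAGR = {cagr(base_2022, 404.0, 6):.1%}")
```

The result is roughly $175 billion in 2022. In other words, the forecast has the market a little more than doubling over six years.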

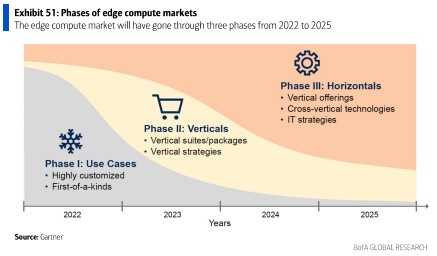

Over 2022-2025, the edge computing market is expected to develop roughly as follows:

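- Phase 1 (2022): use cases, highly customized
- Phase 2 (2023): verticals, vertical suites/packages
- Phase 3 (2024): horizontals, cross-vertical technologies
- Phase 4 (2025): IT strategy, vertical strategies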

Looking ahead, BofA believes the AI opportunity will come from inference, and that CPUs will be the best choice for edge inference.

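Training happens in core computing. Inference, by contrast, needs a distributed, scalable, low-latency, low-cost model, which is exactly what edge computing provides. The edge computing industry is currently divided over whether CPUs or GPUs should power edge inference. All major vendors support both, but BofA believes CPUs are the better choice for edge inference.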

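Under a GPU model, only 6-8 requests can be processed at a time. CPUs, by contrast, can partition servers by user, which makes them a more efficient processing system at the edge. CPUs also offer cost efficiency, scalability and flexibility, and they let edge computing vendors layer proprietary software on top of the compute process.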

Fog computing

Edge computing also has an offshoot concept: fog computing.

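Fog computing is a network architecture for storing, communicating and transmitting data when end devices perform large amounts of edge computing on site.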

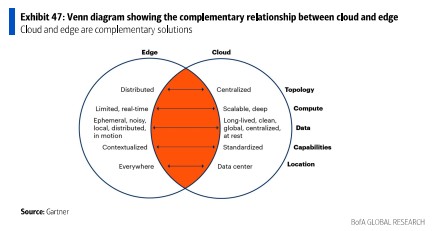

BofA believes fog computing and cloud computing complement each other, and that a hybrid/multi-cloud deployment model may emerge.

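As applications migrate to the cloud, hybrid/multi-cloud approaches are being deployed. Cloud and edge computing are complementary, and a distributed approach can create value by meeting different needs in different ways.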

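An IDC survey shows that 42% of enterprise respondents struggle to design and implement key components, including infrastructure, connectivity, management and security. Over the long run, combining edge data aggregation and analytics with the scale of cloud access (for analytics and model training, for example) will create a new economy built on digital edge interactions.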
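The edge-aggregation-plus-cloud pattern described above can be sketched as a two-tier pipeline. Each edge or fog node reduces its raw readings to a compact summary, and the cloud tier merges those summaries at scale. The function names, summary fields and sample readings are all hypothetical and only illustrate the division of labor.

```python
from statistics import mean

def summarize_at_edge(readings: list[float]) -> dict:
    """Runs on a (hypothetical) edge/fog node: reduce raw readings to a small summary."""
    if not readings:
        raise ValueError("no readings to summarize")
    return {"count": len(readings), "mean": mean(readings),
            "min": min(readings), "max": max(readings)}

def merge_in_cloud(summaries: list[dict]) -> dict:
    """Runs in the (hypothetical) cloud tier: combine per-site summaries."""
    total = sum(s["count"] for s in summaries)
    # Weight each site's mean by its sample count so the result equals the global mean
    weighted_mean = sum(s["mean"] * s["count"] for s in summaries) / total
    return {"count": total, "mean": weighted_mean,
            "min": min(s["min"] for s in summaries),
            "max": max(s["max"] for s in summaries)}

site_a = summarize_at_edge([20.1, 20.4, 21.0, 19.8])  # illustrative sensor data
site_b = summarize_at_edge([18.5, 18.9])
print(merge_in_cloud([site_a, site_b]))
```

Only the four-field summaries cross the network, not every raw reading. This is the bandwidth and latency argument for processing at the edge. Heavier jobs such as model training still happen in the cloud.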

Quantum computing

Quantum computing stores information in subatomic particles and uses superposition to perform complex calculations.
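To make "superposition" concrete, here is a toy state-vector sketch in plain Python. It simulates one qubit on a classical machine and is not how real quantum hardware or any quantum SDK is programmed. A Hadamard gate turns the basis state |0> into an equal superposition of |0> and |1>, and measurement probabilities are the squared magnitudes of the amplitudes.

```python
import math

def hadamard(state: tuple[complex, complex]) -> tuple[complex, complex]:
    """Apply the Hadamard gate to a single-qubit state (amp_0, amp_1)."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))

def probabilities(state: tuple[complex, complex]) -> tuple[float, float]:
    """Born rule: probability of each outcome is the squared magnitude of its amplitude."""
    return (abs(state[0]) ** 2, abs(state[1]) ** 2)

zero = (1 + 0j, 0j)                   # the basis state |0>
plus = hadamard(zero)                 # equal superposition of |0> and |1>
print(probabilities(plus))            # each outcome is approximately 0.5
print(probabilities(hadamard(plus)))  # H twice returns to |0>, up to rounding

# Describing n qubits classically takes 2**n amplitudes
for n in (1, 10, 50):
    print(n, "qubits ->", 2 ** n, "amplitudes")
```

The last loop shows why classical simulation of many qubits quickly becomes infeasible. Fifty qubits already need about 10^15 amplitudes to describe.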

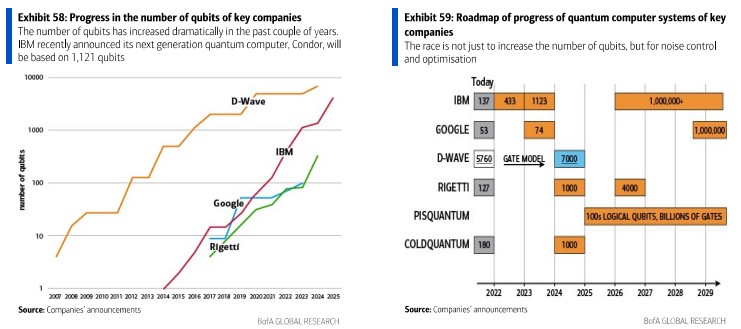

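BofA believes quantum computing matters because it has an inherent, irreplaceable advantage in solving problems that classical computers cannot, an advantage known as "quantum supremacy." Commercialization of quantum computing, however, is still at an early stage.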

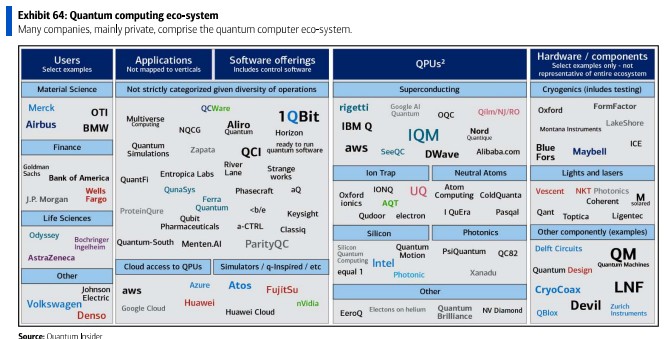

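Quantum computers can solve almost instantly problems that would take classical computers billions of years. Adoption is at a very early stage: only a handful of machines are deployed in the cloud for commercial use, mainly for research. Commercialization is advancing rapidly, however.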

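BofA believes quantum computers break through the boundaries of computing. It argues that combining the two most powerful technologies, AI and quantum computing, could fundamentally change both the physical and the mathematical world.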

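In the short to medium term, life sciences, chemicals, materials, finance and logistics stand to benefit most. In the long term, once AI reaches human cognitive ability or even gains self-awareness, artificial general intelligence (AGI) will bring a fundamental technological shift.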

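The report notes that quantum computers are not suited to routine tasks such as browsing the internet, office work or email. They are suited to complex big-data computations such as blockchain, machine and deep learning, or nuclear simulation. Combining quantum computers with 6G mobile networks will be a game changer across industries.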

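Big data analytics: the untapped potential of big data is huge. The amount of data created is expected to grow rapidly, from 120ZB in 2022 to 183ZB by 2024, an increase of roughly 50%.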
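The 120ZB and 183ZB figures quoted for 2022 and 2024 can be checked directly. The sketch below computes the total multiple and the implied compound annual growth rate, assuming smooth growth between the two endpoints. The helper name is hypothetical.

```python
def growth_summary(start_zb: float, end_zb: float, years: int) -> tuple[float, float]:
    """Return (total multiple, implied compound annual growth rate)."""
    multiple = end_zb / start_zb
    return multiple, multiple ** (1 / years) - 1

multiple, annual = growth_summary(120, 183, 2024 - 2022)
print(f"{multiple:.2f}x over two years, ~{annual:.1%} per year")
```

The two endpoints imply about 1.5x growth, or roughly 23.5% a year, rather than a full doubling.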

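According to IDC, computing bottlenecks mean we currently store, transmit and use only 1% of the world's data. Quantum computing could change this and unlock real economic value, potentially putting 24% of global data to use and doubling global GDP.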

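Cybersecurity: with parallel processing capacity of up to 1 trillion calculations per second (Bernard Marr), quantum computing could technically challenge all current encryption methods, including blockchain. It also opens the door to new encryption techniques built on quantum computing elements.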

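AI and machine learning: progress in machine learning and deep learning is limited by the speed of the underlying computation. Quantum computers could accelerate machine learning by using more data to resolve connections between complex data points faster.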

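Cloud: the cloud could be one of the winners, since it is likely to be the platform where all data creation, sharing and storage take place. Once quantum computers are commercialized, they will require cloud access, and data generation should grow exponentially. Cloud platforms will therefore be the solution.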

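Autonomous vehicle fleet management: a single connected autonomous vehicle will generate as much data as 3,000 internet users. For two vehicles, the volume jumps to that of roughly 8,000-9,000 users. Data growth from autonomous vehicles alone will therefore be exponential.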

Brain-computer interfaces

A brain-computer interface lets humans and animals interact with the outside world directly through their brain waves.

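BofA notes that startups such as Neuralink are working on human-machine collaboration through implants (BCI). Brain-wave control of devices has already been achieved in animal experiments, and early human clinical trials are still under way.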

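Brain-computer interface (BCI) and computer-brain interface (CBI) technologies are currently in development, and there are already cases of people controlling hand movements with their thoughts.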

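Synchron's approach places a mesh tube lined with sensors and electrodes in a blood vessel supplying the brain, where it picks up neuronal signals. The signals are passed to an external unit, translated and relayed to a computer. In clinical trials, paralyzed individuals were able to text, send email, bank online and shop.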

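Neuralink's implant consists of neural threads that a neurosurgical robot inserts into the brain to pick up neural signals. Clinical patients can now move a computer mouse just by thinking.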